In today’s data-driven world, the term “big data” is ubiquitous. From businesses to governments, everyone seems to be talking about harnessing the power of big data. But what exactly does it mean? And why is it so important? To truly comprehend this phenomenon, we need to delve into its core characteristics – the 5 V’s of big data. In this blog post, we will unravel these key elements that define and shape the vast ocean of information surrounding us. So fasten your seatbelts as we embark on a journey to understand the 5 V’s of big data and unlock its true potential in revolutionizing industries and transforming our lives.

Introduction to Big Data

Big data is a term that is used to describe the large volume of data – both structured and unstructured – that inundates a business on a day-to-day basis. But it’s not only the amount of data that is important; it’s also the speed at which this data must be processed in order to make timely, informed decisions.

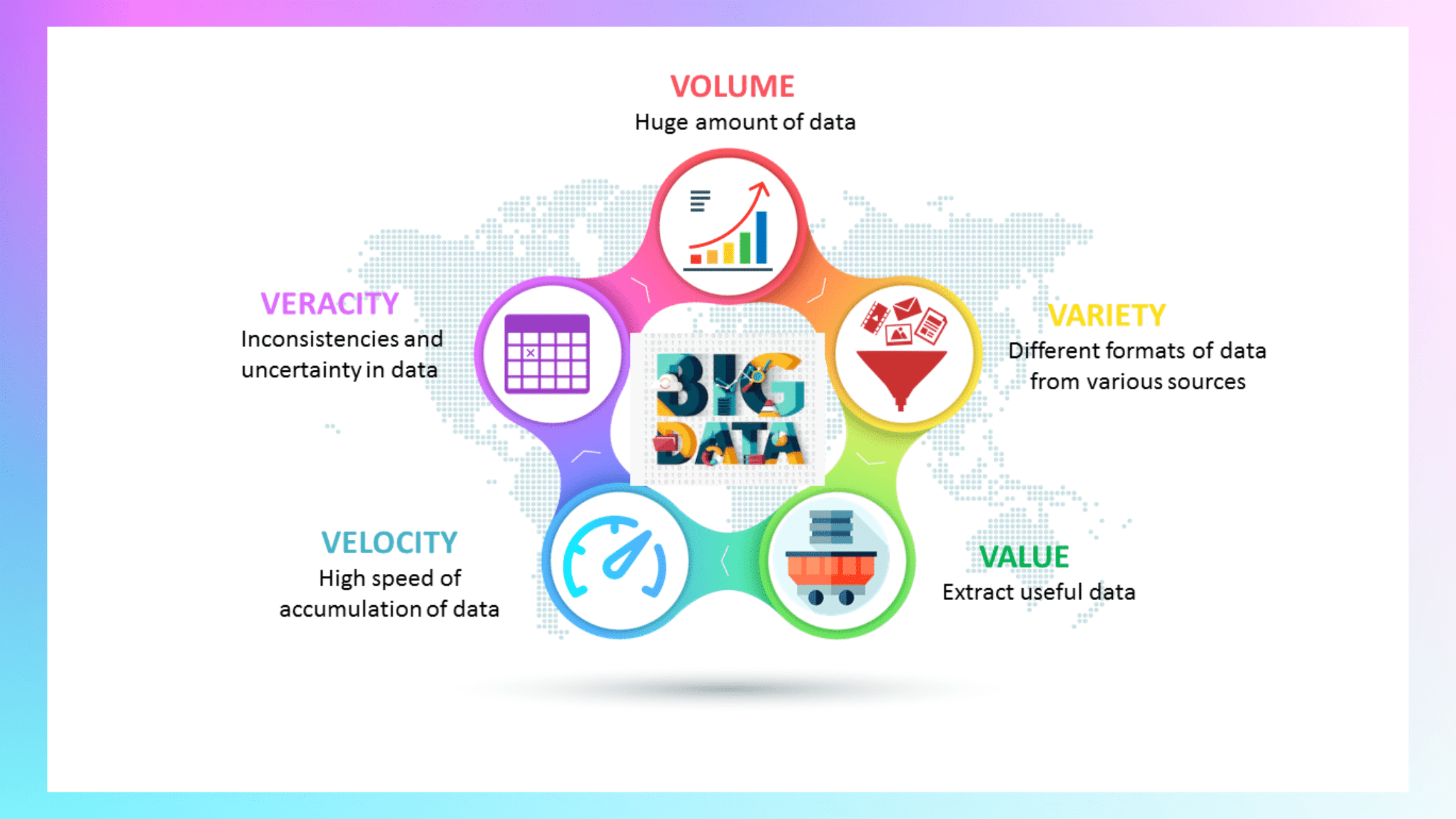

In order to fully understand big data, one must first understand its five defining characteristics, often referred to as the 5V’s: volume, velocity, variety, veracity, and value.

What are the 5 ‘V’s of Big Data?

1. Volume: The amount of data that is being generated is increasing at an unprecedented rate.

2. Variety: The types of data that are being generated are becoming more diverse.

3. Velocity: The speed at which data is being generated is increasing.

4. Veracity: The accuracy of data is often uncertain.

5. Value: The potential value that can be derived from big data analytics can be significant.

Volume: Understanding Big Data’s Size and Scale

1. Volume: Understanding Big Data’s Size and Scale

The first characteristic of big data is its volume. Big data is massive in scale, and it continues to grow at an exponential rate. Every day, we create 2.5 quintillion bytes of data — that’s 2,500,000,000,000,000,000 bytes! And it’s not just humans that are creating all this data. Machines are also generating huge amounts of information, from sensors embedded in our homes and offices to GPS devices tracking our every move.

This deluge of data is only going to increase in the future. According to IBM, by 2020 we will create 44 zettabytes (44 trillion gigabytes) of data per year. That’s the equivalent of every person on earth creating 6 GB of data every day!

To put this into perspective, consider this: if each person on earth took one byte of data each second (which is a very small amount), it would take us 584 years to generate 44 zettabytes of data!

Variety: Exploring the Different Types of Data

There are a variety of different types of data, which can be generally categorized into two main types: structured and unstructured. Structured data is formatted in a specific way and is easy to store, search, and analyze. This type of data is typically found in databases and includes things like customer information, transaction records, and product catalogs. Unstructured data does not have a specific format and can include things like emails, social media posts, videos, images, and sensor data. While this type of data is more difficult to work with, it can contain a lot of valuable information.

Velocity: Capturing and Analyzing Data in Real Time

As our world becomes more and more digitized, the volume of data generated is increasing at an unprecedented rate. Along with this increase in data comes an increase in the need for velocity – the need to capture and analyze this data in real time.

There are a number of ways to achieve velocity, but one of the most important is through the use of streaming data. Streaming data is a type of Big Data that is characterized by its high velocity – it is generated and collected at a very high rate. This means that it can be difficult to store and process streaming data using traditional methods.

That’s where Apache Kafka comes in. Apache Kafka is a distributed streaming platform that provides high-throughput and low-latency streaming of data. It enables you to build real-time applications that can process streams of data as they occur.

Kafka is just one example of how you can achieve velocity with your Big Data. By understanding the need for velocity and utilizing the right tools, you can ensure that your Big Data applications are able to keep up with the fast pace of today’s world.

Veracity: Ensuring Quality and Accuracy of Data

It is important to ensure the quality and accuracy of data when dealing with big data. This can be done in a number of ways, such as verifying the sources of the data, checking for errors and inconsistencies, and using data from multiple sources to cross-check information.

One way to verify the accuracy of data is to check the source. If possible, it is best to use data from primary sources, which are those that collect data firsthand. However, sometimes secondary sources, which use data that has already been collected by someone else, are also used. Checking the reliability of the source is important in both cases.

In addition to verifying the source, it is also important to check for errors and inconsistencies in the data. This can be done by looking at the data itself or by using analytics tools to identify patterns or trends that may indicate errors.

Using data from multiple sources can help to cross-check information and ensure its accuracy. This is because different sources may provide different bits of information about the same thing, which can then be compared and contrasted to find any discrepancies.

Value: Deriving Insights from Big Data

Value is derived from insights that are gleaned from big data. By understanding the patterns and relationships within the data, organizations can make better decisions and improve their performance. To derive value from big data, organizations need to have the right tools and techniques in place to effectively analyze it.

Organizations today are generating more data than ever before. The challenge is not just collecting and storing all this data, but also deriving insights from it that can help improve business decisions and operations. Big data analytics can help organizations uncover hidden patterns, correlations, and other insights that can be used to make better decisions.

To effectively leverage big data, organizations need to have the right tools and techniques in place. They also need to ensure that the data is of high quality and cleansed of any errors or inconsistencies. Otherwise, the insights derived from big data may not be accurate or reliable.

When done correctly, big data analytics can help organizations unlock the value hidden within their data sets. By understanding the patterns and relationships within the data, they can gain valuable insights that can improve their decision-making and performance.

Challenges and Solutions

There are numerous challenges that organizations face when it comes to Big Data. The first challenge is the Variety of data. This can include unstructured data, such as text, images, and audio; semi-structured data, such as XML and JSON; and structured data, such as relational databases. All of this data must be collected, managed, and processed in order to be useful.

The second challenge is the Velocity of data. This refers to the speed at which data is generated and collected. It can be difficult to keep up with the high volume of data being created every day. In addition, this data must be processed quickly in order to be useful.

The third challenge is the Volume of data. This refers to the amount of data that is being generated and collected. Organizations must have the storage capacity and processing power to handle large volumes of data.

The fourth challenge is the Veracity of data. This refers to the accuracy and quality of the data. With so much information being generated from a variety of sources, it can be difficult to ensure that all of the data is accurate and complete.

Fortunately, there are solutions available for each of these challenges. For example, organizations can use Hadoop to store and process large volumes of data quickly and cheaply. In addition, there are many tools available for cleansing and managing data quality. By understanding the challenges involved in Big Data, organizations can better prepare themselves to take advantage of its benefits

Conclusion

By understanding the 5 V’s of Big Data, and how they work together to create a comprehensive view of data analysis, businesses can better understand the value that big data holds for them. With careful consideration of these five variables and an understanding of their capabilities, any business or organization can leverage the power of big data in order to stay ahead in today’s competitive marketplace. As technology advances and more companies embrace big data, its importance will only continue to grow.