Sequential data is central to many fields, including natural language processing (NLP), time series analysis, and bioinformatics. Because it is inherently ordered, it presents unique challenges and opportunities. In NLP, for instance, understanding a sentence requires processing its words in order. Time series analysis, pivotal in finance and weather forecasting, depends on interpreting data points collected over time. Similarly, biological sequences such as DNA are fundamental to genetic research and bioinformatics.

Recurrent Neural Networks (RNNs) emerged as a powerful tool for handling such sequential data. Unlike feedforward neural networks, RNNs possess an internal state that is influenced by previous inputs, allowing them to maintain contextual information across a sequence. This capability makes RNNs particularly suitable for tasks like language modeling, speech recognition, and sequence prediction.

However, RNNs face significant challenges, notably the vanishing gradient problem. During training, the gradients used to update the network’s weights can shrink exponentially as they are propagated backward through time. As a result, RNNs struggle to learn long-term dependencies, because information from early in the sequence has little influence on the weight updates. To address this, Long Short-Term Memory (LSTM) networks were introduced. The LSTM, a type of RNN, incorporates mechanisms to maintain long-term dependencies, thereby mitigating the vanishing gradient problem. LSTM networks have significantly improved the ability to process and analyze sequential data across a wide range of applications.
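
To make the exponential shrinkage concrete, here is a minimal sketch using a scalar tanh RNN. The function name, the weight value, and the fixed pre-activation are illustrative assumptions, not taken from any particular model. Backpropagation through time multiplies one local derivative per step, so when each factor is below 1 the product collapses toward zero.

```python
import math


def gradient_magnitudes(recurrent_weight, pre_activation, steps):
    """Track |d h_t / d h_0| for a scalar RNN h_t = tanh(w * h_{t-1} + x_t).

    Assumption for illustration: the pre-activation is the same at every step,
    so each step contributes the same local derivative w * (1 - tanh(z)^2).
    """
    local_derivative = recurrent_weight * (1.0 - math.tanh(pre_activation) ** 2)
    magnitude = 1.0
    history = []
    for _ in range(steps):
        magnitude *= abs(local_derivative)  # chain rule: one factor per time step
        history.append(magnitude)
    return history


if __name__ == "__main__":
    history = gradient_magnitudes(recurrent_weight=0.9, pre_activation=0.5, steps=50)
    for step in (1, 10, 25, 50):
        print(f"after {step:2d} steps: {history[step - 1]:.3e}")
```

With these assumed values each factor is below 1, so the gradient reaching the earliest time steps is negligible after a few dozen steps. A factor above 1 would instead grow without bound, which is the exploding gradient case.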

LSTM: A Solution for Long-Term Dependencies

Long Short-Term Memory (LSTM) networks are a variant of Recurrent Neural Networks (RNNs) designed to handle long-term dependencies in sequential data. Their core architectural advantage over standard RNNs lies in their memory cells and gating mechanisms. Whereas standard RNNs struggle with the vanishing gradient problem, LSTMs incorporate specialized gates that manage the flow of information.

By selectively remembering and processing information, LSTMs effectively mitigate the vanishing gradient problem, ensuring that important information from earlier in the sequence influences the network’s outputs. This capability makes LSTMs indispensable in various machine learning applications.

Variants such as bidirectional LSTMs and convolutional LSTMs further extend the versatility and performance of LSTMs in complex tasks, and have made these networks essential tools for handling sequential data across various domains.

Applications of LSTMs

Long Short Term Memory (LSTM) networks have become a cornerstone in various machine learning tasks, notably machine translation and speech recognition. However, the potential of LSTMs extends far beyond these common applications. In cutting-edge research, LSTMs are being applied in numerous innovative ways, transforming fields like Natural Language Processing (NLP), time series forecasting, generative modeling, and bioinformatics.

Natural Language Processing (NLP)

Use Cases: Sentiment analysis, text summarization, question-answering systems, and dialogue systems.

Advancements: Hybrid models combining LSTMs with attention mechanisms have significantly enhanced the ability to generate coherent and contextually accurate outputs. In particular, deep LSTM models have advanced tasks like sentiment analysis and text summarization.

- Sentiment Analysis:
  - Application: LSTMs excel in understanding context and capturing the sentiment of text, making them ideal for analyzing sentiments in social media posts, reviews, and articles.
  - Advancement: Recent advancements include fine-tuning pre-trained LSTM models on large datasets to improve accuracy in detecting nuanced sentiments and emotions.

- Text Summarization:
  - Application: LSTMs are used to generate concise summaries of long documents by identifying and extracting key points.
  - Advancement: Hybrid models combining LSTMs with attention mechanisms have significantly enhanced the ability to generate coherent and contextually accurate summaries.

- Question Answering Systems:
  - Application: LSTMs help in understanding and generating responses to queries, facilitating more accurate and context-aware question-answering systems.
  - Advancement: Integration with transformer models has improved handling of complex language structures and provided more precise answers.

- Dialogue Systems:
  - Application: In chatbots and virtual assistants, LSTMs manage the flow of conversation by maintaining context across multiple dialogue turns.
  - Advancement: Advanced dialogue systems now incorporate bidirectional LSTMs to better understand and respond to user inputs in a more human-like manner.

Time Series Forecasting

Use Cases: Financial market predictions, weather forecasting, and anomaly detection.

Advancements: Integration of LSTM models with reinforcement learning techniques has improved adaptability and precision in dynamic conditions. LSTMs capture temporal dependencies in various time series data, making them ideal for financial market predictions and anomaly detection.

- Financial Market Predictions:
  - Application: LSTMs capture temporal dependencies in stock prices and economic indicators, aiding in more accurate financial forecasting.
  - Advancement: LSTM models combined with reinforcement learning techniques have improved adaptability and precision in dynamic market conditions.

- Weather Forecasting:
  - Application: LSTMs analyze sequential weather data to predict future weather patterns.
  - Advancement: The integration of convolutional LSTM networks (ConvLSTMs) allows for better spatial and temporal data processing, enhancing the accuracy of weather forecasts.

- Anomaly Detection:
  - Application: LSTMs detect unusual patterns in time series data, useful in fraud detection, network security, and industrial monitoring.
  - Advancement: Advanced LSTM models, such as fuzzy LSTMs, improve the detection of subtle anomalies by incorporating uncertainty handling capabilities.

Generative Modeling

Use Cases: Text generation, music composition, and image captioning.

Advancements: Combining LSTMs with generative adversarial networks (GANs) has led to more realistic and contextually appropriate outputs. Pairing convolutional networks with LSTMs in tasks like image captioning has significantly improved the quality of generated content.

- Text Generation:
  - Application: LSTMs are used to generate coherent and contextually relevant text, useful in creative writing and automated content generation.
  - Advancement: Combining LSTMs with generative adversarial networks (GANs) has led to more realistic and contextually appropriate text generation.

- Music Composition:
  - Application: LSTMs generate music by learning from sequences of musical notes and creating new compositions.
  - Advancement: The use of deep LSTM networks has enhanced the ability to capture complex musical patterns, resulting in more intricate and harmonious compositions.

- Image Captioning:
  - Application: LSTMs generate descriptive captions for images by interpreting visual data and generating relevant textual descriptions.
  - Advancement: Integration with convolutional neural networks (CNNs) has improved the accuracy and relevance of the generated captions.

Bioinformatics

Use Cases: Protein structure prediction, gene expression analysis, and drug discovery.

Advancements: Bidirectional and convolutional LSTMs have enhanced the ability to capture complex biological patterns. These models are particularly effective in protein structure prediction and gene expression analysis.

- Protein Structure Prediction:
  - Application: LSTMs predict the three-dimensional structures of proteins from their amino acid sequences.
  - Advancement: Recent models combine LSTMs with graph neural networks to enhance accuracy in predicting complex protein structures.

- Gene Expression Analysis:
  - Application: LSTMs analyze gene expression data to identify patterns and predict gene behavior under various conditions.
  - Advancement: The incorporation of bidirectional LSTMs has improved the analysis by capturing dependencies in both directions along the gene sequences.

- Drug Discovery:
  - Application: LSTMs help in predicting the interactions between drugs and their targets, aiding in the discovery of new pharmaceuticals.
  - Advancement: Combining LSTMs with reinforcement learning techniques has accelerated the drug discovery process by efficiently exploring chemical space.

LSTMs have transcended their traditional roles, driving innovation across diverse fields. Their ability to manage long-term dependencies and sequential data has unlocked new potentials in NLP, time series forecasting, generative modeling, and bioinformatics, marking significant advancements in each area.

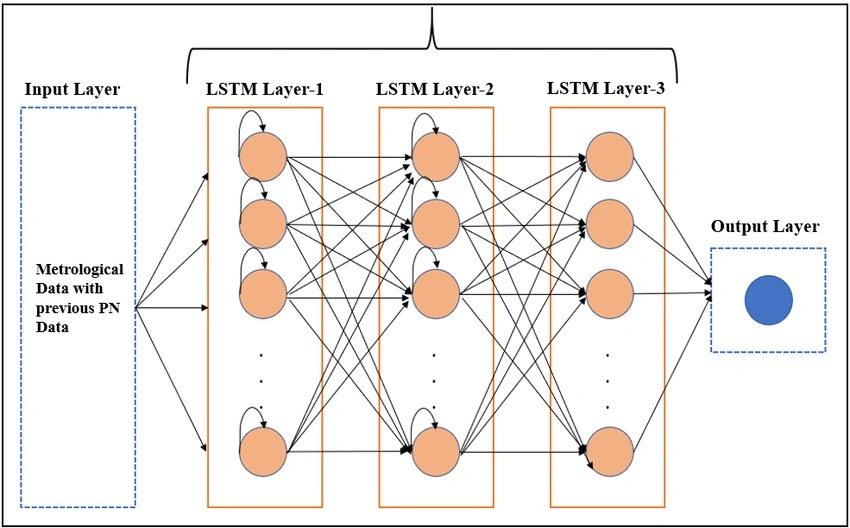

Demystifying LSTM Architecture

Long Short Term Memory (LSTM) networks are a cornerstone of deep learning, particularly well-suited for handling sequential data. Their design addresses the limitations of traditional Recurrent Neural Networks (RNNs) by effectively managing long-term dependencies. In this article, we will delve into the architecture of LSTM cells, explaining their core components and highlighting advanced LSTM variants.

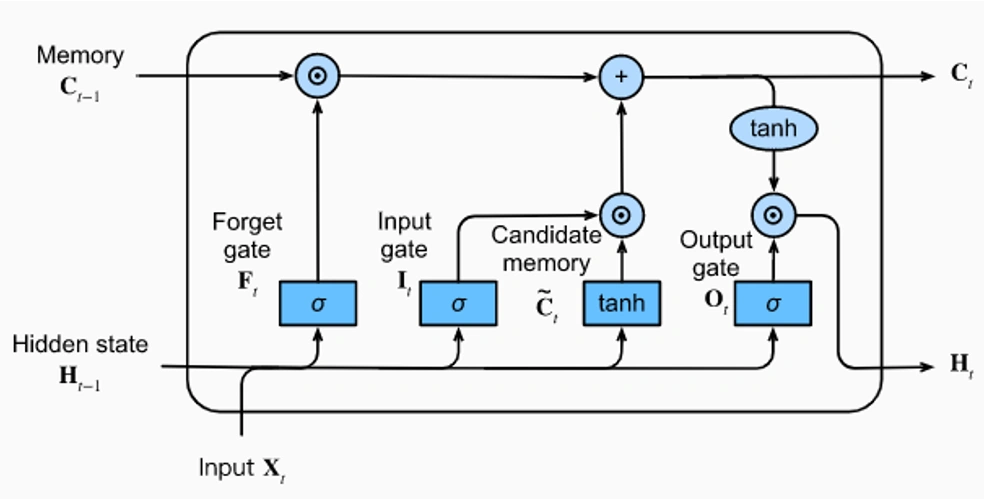

Core Components of an LSTM Cell

- Memory Cell:
  - Function: The memory cell serves as the long-term storage unit within an LSTM. It retains information over extended periods, enabling the network to remember previous states and carry forward relevant data.
  - Role: It is critical for tasks that require understanding context over long sequences, such as language modeling and time series forecasting.

- Forget Gate:
  - Function: The forget gate determines which information from the memory cell should be discarded. It uses a sigmoid activation function to produce values between 0 and 1, where 0 indicates complete forgetting and 1 indicates full retention.
  - Role: By selectively forgetting irrelevant data, the forget gate helps in preventing the cell state from being cluttered with unnecessary information.

- Input Gate:
  - Function: The input gate regulates the flow of new information into the memory cell. It uses a sigmoid function to control how much of the candidate state should be added to the cell state.
  - Role: This gate ensures that only relevant information is updated into the memory cell.

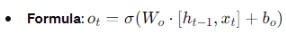

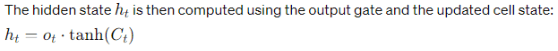

- Output Gate:
  - Function: The output gate decides what part of the cell state should be output. It controls how much of the internal state is exposed as the hidden state passed to the next time step and to the next layers in the network.
  - Role: By filtering the cell state, the output gate helps in producing meaningful outputs based on the current input and the memory of previous states.

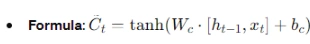

- Candidate Gate:
  - Function: The candidate gate generates potential new values for the cell state. These candidates are created using the tanh activation function, which scales the values between -1 and 1.
  - Role: The candidate state represents possible new information that might be added to the cell state based on the current input.

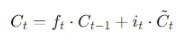
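
For reference, the components described above are commonly written as the following set of equations. This is the standard textbook formulation rather than something stated in this article, and the symbol names are assumptions: $x_t$ is the current input, $h_{t-1}$ the previous hidden state, $c_{t-1}$ the previous cell state, $\sigma$ the sigmoid function, and $\odot$ element-wise multiplication.

```latex
\begin{aligned}
f_t &= \sigma\left(W_f\,[h_{t-1}, x_t] + b_f\right) && \text{(forget gate)} \\
i_t &= \sigma\left(W_i\,[h_{t-1}, x_t] + b_i\right) && \text{(input gate)} \\
\tilde{c}_t &= \tanh\left(W_c\,[h_{t-1}, x_t] + b_c\right) && \text{(candidate state)} \\
c_t &= f_t \odot c_{t-1} + i_t \odot \tilde{c}_t && \text{(cell state update)} \\
o_t &= \sigma\left(W_o\,[h_{t-1}, x_t] + b_o\right) && \text{(output gate)} \\
h_t &= o_t \odot \tanh(c_t) && \text{(hidden state)}
\end{aligned}
```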

The new cell state is computed from these pieces: the previous cell state is scaled element-wise by the forget gate’s output, and the candidate state, scaled element-wise by the input gate’s output, is added to it.
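
The following is a minimal pure-Python sketch of a single LSTM time step, written to mirror the gate descriptions in this section. It assumes the standard formulation in which every gate reads the concatenation of the previous hidden state and the current input. The helper names (`affine`, `lstm_step`, `demo_params`) and the hand-picked weights are illustrative assumptions, not a production implementation.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def affine(weights, bias, vector):
    """Compute W @ vector + b, with W given as a list of rows."""
    return [sum(w * v for w, v in zip(row, vector)) + b
            for row, b in zip(weights, bias)]


def lstm_step(x, h_prev, c_prev, params):
    """Run one LSTM time step and return the new (hidden state, cell state).

    `params` maps each gate name to a (W, b) pair applied to [h_prev, x].
    """
    z = h_prev + x  # list concatenation: [h_{t-1}, x_t]
    forget = [sigmoid(v) for v in affine(*params["forget"], z)]
    input_gate = [sigmoid(v) for v in affine(*params["input"], z)]
    candidate = [math.tanh(v) for v in affine(*params["candidate"], z)]
    output = [sigmoid(v) for v in affine(*params["output"], z)]
    # Cell update: keep part of the old memory, add part of the candidate.
    c = [f * c_old + i * g
         for f, c_old, i, g in zip(forget, c_prev, input_gate, candidate)]
    # Hidden state: a filtered view of the squashed cell state.
    h = [o * math.tanh(c_k) for o, c_k in zip(output, c)]
    return h, c


# Hand-picked weights (assumed for the demo): the large positive forget bias
# keeps the gate near 1, and the large negative input bias keeps it near 0.
demo_params = {
    "forget": ([[0.0, 0.0]], [10.0]),
    "input": ([[0.0, 0.0]], [-10.0]),
    "candidate": ([[0.0, 1.0]], [0.0]),
    "output": ([[0.0, 0.0]], [10.0]),
}

if __name__ == "__main__":
    h, c = [0.0], [0.5]
    for x_t in [1.0, -1.0, 0.5, -0.5]:
        h, c = lstm_step([x_t], h, c, demo_params)
    print("cell state:", c, "hidden state:", h)
```

With the forget gate held near 1 and the input gate near 0, the cell state stays close to its initial value regardless of the inputs, which is the behavior that lets an LSTM carry information across many time steps.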

Advanced LSTM Variants

- Peephole LSTMs:
  - Description: Peephole LSTMs extend the basic LSTM architecture by allowing the gates to have direct connections to the cell state.
  - Advantage: This addition enhances the learning capacity by providing the gates with more information about the cell state.

- Bidirectional Long Short-Term Memory:
  - Description: Bidirectional LSTMs process data in both forward and backward directions, effectively capturing context from both past and future states.
  - Advantage: They are particularly useful in applications like speech recognition and language translation, where context from both directions is crucial.

- Convolutional Long Short-Term Memory:
  - Description: Convolutional LSTMs replace the matrix multiplications inside the LSTM gates with convolutions, enabling spatial feature extraction in addition to temporal feature learning.
  - Advantage: This variant excels in tasks involving spatiotemporal data, such as video analysis and precipitation nowcasting.
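
The bidirectional idea can be sketched independently of the cell type: run one recurrent pass over the sequence, run a second pass over the reversed sequence, re-align the second pass, and pair the two states at each position. In the sketch below a toy scalar tanh RNN stands in for the LSTM to keep the example short. The function names and weights are assumptions for illustration, and a real bidirectional LSTM would use separately trained parameters for each direction.

```python
import math


def rnn_pass(inputs, weight_in=0.5, weight_rec=0.5):
    """Run a toy scalar tanh RNN and return its state at every position."""
    state, states = 0.0, []
    for x in inputs:
        state = math.tanh(weight_in * x + weight_rec * state)
        states.append(state)
    return states


def bidirectional(inputs, forward_fn=rnn_pass, backward_fn=rnn_pass):
    """Pair a left-to-right state with a right-to-left state at each position."""
    forward = forward_fn(inputs)
    # Process the reversed sequence, then flip back to the original order.
    backward = backward_fn(inputs[::-1])[::-1]
    return list(zip(forward, backward))


if __name__ == "__main__":
    for position, (fwd, bwd) in enumerate(bidirectional([1.0, 0.0, 0.0])):
        print(position, round(fwd, 3), round(bwd, 3))
```

In this toy run the only nonzero input is at position 0. The forward state at the last position still carries a trace of it, while the backward state there is zero because nothing follows it. Each direction therefore sees only its own side of the context, and pairing the two gives every position both.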

LSTM networks, with their intricate gating mechanisms and memory cells, provide a robust framework for learning from sequential data. The advancements in LSTM architectures, such as bidirectional and convolutional LSTMs, further enhance their applicability across diverse deep learning domains. By understanding and leveraging these sophisticated models, one can effectively tackle complex problems in natural language processing, time series analysis, and beyond.

Implementation in Deep Learning:

LSTMs are typically implemented and trained as layers within larger deep learning models. For instance, an LSTM can be paired with convolutional layers, as in convolutional LSTM networks for spatiotemporal data or CNN-LSTM models for tasks like image captioning.

Advanced LSTM Variants:

Bidirectional Long Short-Term Memory: Processes information in both forward and backward directions, enhancing context comprehension in natural language processing.

Basic LSTM: Often followed by fully connected (dense) layers to improve performance on complex tasks.

By integrating LSTM layers into larger networks, the architecture can be tailored to specific tasks, drawing on other deep learning techniques to improve performance and versatility.

Cutting-Edge Research Advancements in LSTMs

Recent research in LSTM architecture has focused on exploring alternative gating mechanisms, dynamic gating, and hierarchical LSTM structures to improve performance and efficiency. These advancements aim to address specific limitations of traditional LSTMs, such as computational complexity and the vanishing/exploding gradient issues encountered in very deep networks.

Key Trends:

- Alternative Gating Mechanisms: Researchers are experimenting with new gating strategies to enhance the basic LSTM functionality.

- Dynamic Gating: This approach adapts gate functions dynamically based on the context, improving the network’s adaptability.

- Hierarchical LSTM Structures: Implementing multi-level LSTM models to capture different levels of abstraction in sequential data.

Integration with Other Architectures:

- Attention Mechanisms: Combining LSTMs with attention mechanisms has significantly improved their performance in tasks like machine translation and text summarization.

- Fuzzy LSTM: This variant incorporates fuzzy logic principles to handle uncertainty and imprecision, enhancing the robustness of LSTM models.
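
As a rough illustration of how attention is combined with an LSTM, the sketch below scores each hidden state produced by the LSTM against a query vector, turns the scores into weights with a softmax, and returns the weighted average as a context vector. This is plain dot-product attention. The function name and the toy vectors are assumptions, and published models differ in how they compute the scores.

```python
import math


def dot_product_attention(query, hidden_states):
    """Return (weights, context) for a query over a list of hidden states."""
    scores = [sum(q * h for q, h in zip(query, state)) for state in hidden_states]
    # Softmax, shifted by the maximum score for numerical stability.
    max_score = max(scores)
    exps = [math.exp(s - max_score) for s in scores]
    total = sum(exps)
    weights = [e / total for e in exps]
    # Context vector: weighted average of the hidden states.
    dim = len(hidden_states[0])
    context = [sum(w * state[d] for w, state in zip(weights, hidden_states))
               for d in range(dim)]
    return weights, context


if __name__ == "__main__":
    # Toy vectors standing in for LSTM hidden states at three time steps.
    states = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
    weights, context = dot_product_attention([0.0, 2.0], states)
    print("weights:", [round(w, 3) for w in weights])
    print("context:", [round(v, 3) for v in context])
```

Because the weights are recomputed for every query, the model can draw on any earlier time step directly instead of relying only on what the final hidden state has retained.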

While these advancements are promising, challenges remain, such as the computational demands and the gradient issues in very deep networks.

Conclusion: The Future of LSTMs

LSTM networks are highly effective in addressing long-term dependencies in sequential data, making them valuable in many machine learning tasks. Ongoing research focuses on enhancing their performance through alternative gating mechanisms, dynamic gating, and hierarchical structures. Applications span diverse domains, including natural language processing, time series forecasting, and bioinformatics. To explore further, consider reviewing research papers on LSTM advancements and experimenting with open-source frameworks such as TensorFlow and PyTorch, which provide LSTM layers along with extensive libraries and resources for building models. These continuous improvements help keep LSTMs a useful tool in the evolving landscape of deep learning.