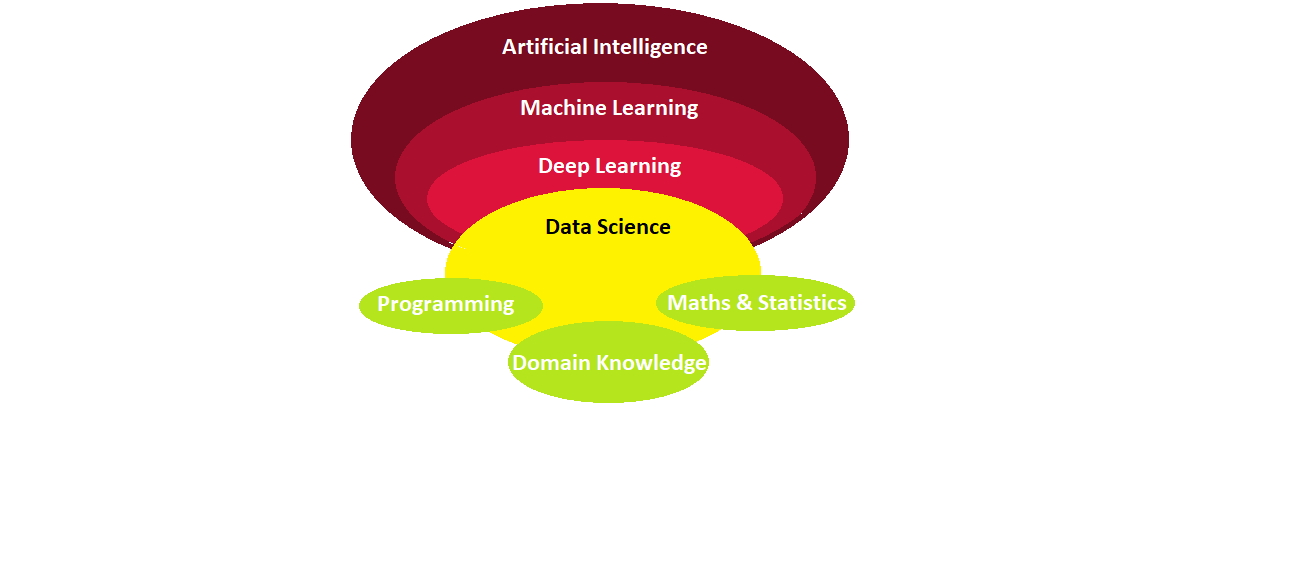

Machine learning (ML) is a powerful branch of artificial intelligence (AI) that allows computers to learn without explicit programming. Instead of following a set of rigid instructions, ML algorithms can analyze data, identify patterns, and make predictions. This article explores the exciting journey of machine learning, from its theoretical beginnings to its real-world applications today.

Teaching a computer to recognize your friends in photos, without showing it every picture it will ever encounter, means training it to learn from examples. Imagine you have a bunch of photos where your friends are tagged with their names. The computer looks at these photos and tries to find patterns. For example, it might notice that one friend has blue eyes and curly hair, while another friend has brown eyes and wears glasses.

Using these patterns, the computer starts to guess who’s who in new photos. If it sees someone with blue eyes and curly hair, it might say, “Hey, that looks like Arushi!” But the computer doesn’t always get it right at first. It needs lots of practice and feedback to improve, just like how we learn from our mistakes.

This is where machine learning comes in. It’s like giving the computer a super smart brain that learns from experience. As the computer sees more and more photos and gets feedback on its guesses, it gets better at recognizing your friends. It’s like teaching a robot to be a detective, but instead of clues, it uses pictures!

In the big world of artificial intelligence (AI), machine learning is like the secret sauce that helps AI systems become smarter over time. It’s what makes AI capable of doing amazing things like understanding speech, playing games, and yes, even recognizing your friends in photos. It’s pretty cool how technology can learn and improve, just like we do!

The evolution of machine learning is a captivating story. Early ideas emerged in the 1940s, but limitations in computing power hampered significant progress. With the recent explosion of data and computing muscle, machine learning has taken center stage. Today, it’s used everywhere, from spam filters in your email to recommending movies you might enjoy. Machine learning is constantly evolving, shaping the future of technology and impacting our lives in profound ways.

Early Days: Planting the Seeds (1940s-1980s)

Pioneering the Learning Machine: The fascinating journey of machine learning began in the 1940s and 1950s. Visionary minds like Alan Turing, a pioneer in computer science, laid the groundwork for the concept of a “learning machine.” This machine would be able to adapt and improve its performance without needing explicit programming for every situation.

Inspired by the Brain: Taking inspiration from the human brain’s structure and learning capabilities, researchers like Frank Rosenblatt started developing artificial neural networks. These early models, like the perceptron, were a crucial first step. They could learn from data by adjusting internal connections based on what they “saw.” Imagine showing a perceptron many pictures of cats. By analyzing these images, it could learn to identify cats in new photos, even if it had never seen those specific pictures before.
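To make "adjusting internal connections" concrete, here is a minimal sketch of the classic perceptron update rule in plain Python. The two features and the tiny dataset are made up for illustration (real images would need far more than two numbers), and the function names are hypothetical rather than taken from any library.

```python
def train_perceptron(samples, labels, epochs=20, lr=1.0):
    """Perceptron rule: nudge the weights only when a prediction is wrong."""
    weights = [0.0] * len(samples[0])
    bias = 0.0
    for _ in range(epochs):
        mistakes = 0
        for x, y in zip(samples, labels):
            activation = sum(w * xi for w, xi in zip(weights, x)) + bias
            prediction = 1 if activation > 0 else 0
            error = y - prediction  # -1, 0, or +1
            if error != 0:
                # Move the decision boundary toward the misclassified example.
                weights = [w + lr * error * xi for w, xi in zip(weights, x)]
                bias += lr * error
                mistakes += 1
        if mistakes == 0:  # every training example is classified correctly
            break
    return weights, bias


def predict(weights, bias, x):
    return 1 if sum(w * xi for w, xi in zip(weights, x)) + bias > 0 else 0


# Hypothetical features per picture: (ear pointiness, whisker length); 1 = cat.
samples = [(0.9, 0.8), (0.8, 0.9), (0.7, 0.7), (0.2, 0.1), (0.1, 0.3), (0.3, 0.2)]
labels = [1, 1, 1, 0, 0, 0]

weights, bias = train_perceptron(samples, labels)
print(predict(weights, bias, (0.85, 0.75)))  # a "new photo" not in the training set
```

The model only changes when it makes a mistake, which is the "learning from feedback" idea in its simplest form. This works here because the toy data can be split by a straight line; the perceptron has no such guarantee on data that cannot.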

Limitations and Stepping Stones: Despite these groundbreaking ideas, progress faced hurdles. Computer technology simply wasn’t powerful enough to handle the complex calculations needed for advanced learning algorithms. Additionally, researchers were still grappling with the theoretical underpinnings of how these models should learn most effectively.

Evolutionary Spark: While significant breakthroughs were limited during this period, the early era of machine learning laid the foundation for future advancements. These initial concepts, like the perceptron, served as stepping stones for the development of more sophisticated learning algorithms that would emerge in the years to come.

The concept of a “learning machine” introduced by Alan Turing in the 1940s sparked the evolutionary path of machine learning. Ideas borrowed from biological evolution entered the picture later: “differential evolution,” introduced in the mid-1990s, is a type of evolutionary algorithm used for global optimization problems. It keeps a population of candidate solutions and mimics natural selection, combining existing candidates into new ones and keeping whichever scores better, so the population improves iteratively. This approach has been applied in various fields, including machine learning, for example to tune the parameters of a model.
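The loop behind differential evolution is short enough to sketch. The version below is a simplified take on the common "rand/1/bin" variant, written in plain Python under some assumptions: the parameter names (`f`, `cr`), their default values, and the `sphere` test function are illustrative choices, not something prescribed by this article.

```python
import random


def differential_evolution(objective, bounds, pop_size=20, f=0.8, cr=0.9,
                           generations=200, seed=0):
    """Minimize `objective` over the box `bounds` with a basic DE loop."""
    rng = random.Random(seed)
    dim = len(bounds)
    population = [[rng.uniform(low, high) for low, high in bounds]
                  for _ in range(pop_size)]
    scores = [objective(ind) for ind in population]
    for _ in range(generations):
        for i in range(pop_size):
            # Mutation: combine three other, distinct population members.
            a, b, c = rng.sample([j for j in range(pop_size) if j != i], 3)
            forced = rng.randrange(dim)  # at least one coordinate always changes
            trial = []
            for d in range(dim):
                if rng.random() < cr or d == forced:
                    value = population[a][d] + f * (population[b][d] - population[c][d])
                    low, high = bounds[d]
                    value = min(max(value, low), high)  # stay inside the box
                else:
                    value = population[i][d]  # crossover: keep the parent's value
                trial.append(value)
            # Selection: the trial replaces its parent only if it is no worse.
            trial_score = objective(trial)
            if trial_score <= scores[i]:
                population[i], scores[i] = trial, trial_score
    best = min(range(pop_size), key=scores.__getitem__)
    return population[best], scores[best]


def sphere(x):
    """Toy objective with its minimum of 0 at the origin."""
    return sum(v * v for v in x)


best_x, best_score = differential_evolution(sphere, [(-5.0, 5.0)] * 3)
print(best_x, best_score)
```

Note that the algorithm never looks at a gradient. It only compares scores, which is why it suits problems where the objective is a black box.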

The Shift: From Knowledge to Data (1990s-2000s)

The 1990s witnessed a paradigm shift in machine learning. The focus moved from knowledge-based AI to data-driven approaches. Powerful algorithms like Support Vector Machines (SVMs) and decision trees came into wide use, enabling machines to learn complex patterns from vast amounts of data. This era also saw the rise of statistical learning theory, providing a solid mathematical framework for understanding and improving machine learning algorithms.

The Rise of Deep Learning (2000s-Present)

With the explosion of data and advancements in computing power, the 2000s ushered in the era of deep learning. Deep learning algorithms, inspired by the structure and function of the brain, are essentially artificial neural networks with many layers. These complex models can learn intricate relationships from massive datasets, leading to breakthroughs in areas like image recognition, natural language processing, and machine translation.

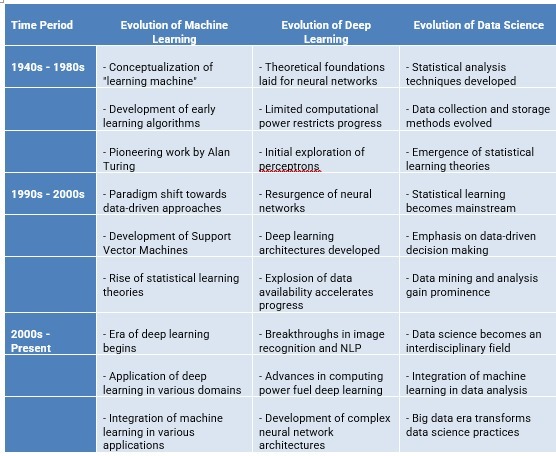

This table summarizes the key milestones and developments in Machine Learning, Deep Learning, and Data Science over different time periods, highlighting the evolution and progression of these fields.

The Future: A World Transformed by Machine Learning

Machine learning is rapidly transforming our world. It is used in various applications, from recommending products online to powering self-driving cars. As machine learning algorithms become more sophisticated and data continues to grow, we can expect even more profound advancements in the years to come.

Beyond the Basics: Exploring Technical Concepts

- Supervised vs. Unsupervised Learning: Machine learning can be categorized into supervised and unsupervised learning. In supervised learning, the model is trained on labeled data, where the desired output is provided. In unsupervised learning, the model identifies patterns in unlabeled data without any predefined classifications.

- Deep Learning Architectures: Deep learning utilizes various architectures like convolutional neural networks (CNNs) for image recognition and recurrent neural networks (RNNs) for sequence data like text and speech.
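The difference between supervised and unsupervised learning shows up even on a handful of numbers. In this rough sketch, the same six measurements are used twice: once with labels (a nearest-centroid classifier) and once without (a two-cluster k-means). The data, the label names, and the function names are all invented for illustration.

```python
def fit_centroids(points, labels):
    """Supervised: average the points that share each known label."""
    groups = {}
    for p, label in zip(points, labels):
        groups.setdefault(label, []).append(p)
    return {label: sum(ps) / len(ps) for label, ps in groups.items()}


def classify(centroids, p):
    """Assign a new point to the label with the closest centroid."""
    return min(centroids, key=lambda label: abs(centroids[label] - p))


def two_means(points, iterations=10):
    """Unsupervised: split unlabeled points into two clusters (1-D k-means, k=2)."""
    low, high = min(points), max(points)  # initial cluster centers
    for _ in range(iterations):
        near_low = [p for p in points if abs(p - low) <= abs(p - high)]
        near_high = [p for p in points if abs(p - low) > abs(p - high)]
        low = sum(near_low) / len(near_low)
        high = sum(near_high) / len(near_high)
    return low, high


heights_cm = [150.0, 152.0, 155.0, 180.0, 183.0, 185.0]
labels = ["short", "short", "short", "tall", "tall", "tall"]

centroids = fit_centroids(heights_cm, labels)   # uses the labels
cluster_centers = two_means(heights_cm)         # never sees the labels

print(classify(centroids, 178.0))
print(cluster_centers)
```

Both approaches end up with the same two group centers on this data. The supervised model also knows what each group is called, while the unsupervised one only knows that two groups exist.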

As technology evolves, machine learning will undoubtedly continue to play a pivotal role in shaping the future.

Machine Learning Evolution

The history of machine learning is a fascinating story of continuous evolution. From the mid-1990s onwards, the field has witnessed several distinct phases, each shaping its capabilities.

Early Simplicity (1995-2005): This first decade focused on simpler models like logistic regression and Support Vector Machines (SVMs). These algorithms proved effective for tasks like natural language processing and information retrieval. Their strength lay in their relative ease of training and interpretation compared to more complex models.
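To show why such models count as easy to train and interpret, here is a small sketch of logistic regression with one input feature, fitted by plain gradient descent. The dataset (hours studied versus pass/fail), the learning rate, and the number of epochs are assumptions picked for the example.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def train_logistic_regression(xs, ys, lr=0.5, epochs=2000):
    """Fit p(y=1 | x) = sigmoid(w * x + b) by gradient descent on the log loss."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(epochs):
        grad_w = grad_b = 0.0
        for x, y in zip(xs, ys):
            err = sigmoid(w * x + b) - y  # prediction minus target
            grad_w += err * x
            grad_b += err
        w -= lr * grad_w / n
        b -= lr * grad_b / n
    return w, b


# Hypothetical data: hours studied and whether the exam was passed (1) or not (0).
hours = [0.5, 1.0, 1.5, 2.0, 2.5, 3.0, 3.5, 4.0]
passed = [0, 0, 0, 1, 0, 1, 1, 1]

w, b = train_logistic_regression(hours, passed)
print(f"weight={w:.2f}, bias={b:.2f}")
print(f"p(pass | 1 hour)   = {sigmoid(w * 1.0 + b):.2f}")
print(f"p(pass | 3.5 hours) = {sigmoid(w * 3.5 + b):.2f}")
```

The whole model is two numbers. A positive weight says that more hours raise the predicted chance of passing, which is the kind of direct reading that deeper models do not offer.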

Rise of the Neural Network (2005-Present): Around 2005, the machine learning timeline took a significant turn. Advancements in computer vision and the explosion of data availability fueled the resurgence of neural networks. These complex models, inspired by the human brain, can represent far richer relationships than linear models. However, their intricate structure makes them more challenging to train and deploy, requiring significant computational resources.

The story of machine learning evolution continues to unfold. Researchers are constantly pushing the boundaries, developing new algorithms and refining existing ones. This ongoing pursuit of innovation promises to unlock even greater potential for this transformative field.

Challenges of Neural Networks

- The evolution of deep learning, a powerful subfield of machine learning, has revolutionized many areas. However, this newfound strength comes with its own set of challenges.

- One major hurdle is the immense computational power required for training and evaluating these complex neural networks. The sheer number of calculations involved can strain even the most advanced hardware. This can be a barrier for smaller organizations or applications with limited resources.

- Another challenge lies in the training process itself. Simpler models such as logistic regression have a convex training objective, so training can reach the best possible fit. Deep learning algorithms have no such guarantee of finding the absolute best solution. They can get stuck in suboptimal states, hindering their performance. Researchers are constantly working on improving training techniques to mitigate this issue.

- Finally, the evolution of deep learning has introduced complexities in deploying these models across distributed computing systems. Unlike linear models, neural networks are intricate and require specialized techniques for efficient training across multiple machines. This adds another layer of difficulty for real-world applications.

- Despite these challenges, the ongoing evolution of machine learning offers promising solutions. Researchers are actively developing more efficient training algorithms, exploring hardware advancements, and creating better tools for distributed training. As these efforts progress, deep learning is poised to become even more accessible and powerful.
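The "stuck in suboptimal states" problem from the list above can be seen on a one-dimensional toy function. This sketch runs the same gradient descent from two starting points on a curve with two valleys. The function, learning rate, and step count are arbitrary choices for illustration, and a real network's loss surface has vastly more dimensions.

```python
def loss(x):
    """A bumpy curve with a deep valley near x = -1.30 and a shallow one near x = 1.13."""
    return x**4 - 3 * x**2 + x


def gradient(x):
    return 4 * x**3 - 6 * x + 1


def gradient_descent(start, lr=0.01, steps=500):
    x = start
    for _ in range(steps):
        x -= lr * gradient(x)  # always step downhill from the current position
    return x


x_from_left = gradient_descent(-2.0)
x_from_right = gradient_descent(2.0)

print(f"start -2.0 -> x={x_from_left:.3f}, loss={loss(x_from_left):.3f}")
print(f"start +2.0 -> x={x_from_right:.3f}, loss={loss(x_from_right):.3f}")
```

Both runs stop where the slope is zero, but only the run that starts on the left reaches the deeper valley. The run from the right settles in the shallow one and has no way of knowing that something better exists.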

GPUs and their role

The evolution of machine learning received a major boost with the rise of Graphics Processing Units (GPUs). Originally designed for accelerating graphics rendering, GPUs excel at parallel processing, making them ideal for training complex neural networks. These networks require massive amounts of calculations, and GPUs can tackle them simultaneously, significantly reducing training time. This has unlocked new possibilities for developing more powerful and sophisticated AI applications.

Future of Machine Learning

The evolution of machine learning (ML) is poised for another exciting chapter. Neural networks, with their ability to capture complex, non-linear relationships in data, are expected to play a leading role. Unlike simpler linear models, they can handle intricate patterns, making them ideal for tasks like image recognition and natural language processing.

For instance, imagine a self-driving car. A linear model might struggle to account for all the variables on the road – pedestrians, weather conditions, and other vehicles. A neural network, however, can analyze vast amounts of driving data, learning these nuances and making more accurate predictions.
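A driving model is far too big to show here, but the linear versus non-linear contrast already appears in the classic XOR problem, used below as a toy stand-in. The sketch searches a grid of linear classifiers and compares the best one with a tiny two-unit network. The network weights are hand-picked for the example rather than learned.

```python
from itertools import product

# XOR: the output is 1 only when exactly one of the two inputs is 1.
XOR_CASES = {(0, 0): 0, (0, 1): 1, (1, 0): 1, (1, 1): 0}


def linear_classifier(w1, w2, b, x):
    return 1 if w1 * x[0] + w2 * x[1] + b > 0 else 0


def relu(z):
    return max(0.0, z)


def tiny_network(x):
    """Two hidden ReLU units with hand-picked (not learned) weights."""
    h1 = relu(x[0] + x[1])
    h2 = relu(x[0] + x[1] - 1.0)
    return 1 if h1 - 2.0 * h2 > 0.5 else 0


# Try every linear classifier on a coarse grid of weights and biases.
grid = [i / 2 for i in range(-8, 9)]  # -4.0 to 4.0 in steps of 0.5
best_linear = max(
    sum(linear_classifier(w1, w2, b, x) == y for x, y in XOR_CASES.items())
    for w1, w2, b in product(grid, repeat=3)
)
network_correct = sum(tiny_network(x) == y for x, y in XOR_CASES.items())

print(f"best linear classifier: {best_linear} of 4 correct")
print(f"tiny network:           {network_correct} of 4 correct")
```

No straight line separates the XOR cases, so the best linear classifier gets three of four right, while one hidden layer is enough to get all four.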

However, neural networks still come with real challenges. Researchers are actively developing more efficient training algorithms to overcome limitations like getting stuck in suboptimal states. Additionally, advancements in hardware, such as specialized AI chips, are addressing the immense computational demands of these models.

As these efforts progress, the evolution of ML promises to make neural networks even more accessible and powerful. This will undoubtedly lead to a new wave of groundbreaking applications that will continue to reshape our world.

Spark and Machine Learning

While a brief history of machine learning reveals roots that reach back to the 1940s and to Frank Rosenblatt’s perceptron in the late 1950s, the field has seen explosive growth in recent years. This progress is fueled in part by powerful tools like Apache Spark, a distributed computing framework well suited to large-scale machine learning tasks.

Deterministic vs. Stochastic: A Tale of Two Approaches

The development of machine learning in the 1960s can be separated into two main approaches: deterministic and stochastic. Deterministic approaches, championed by researchers like Vapnik, Chervonenkis, Aizerman, and Yakubovich, focused on finding a separating hyperplane (the generalization of a line or plane to higher dimensions) that could perfectly divide objects belonging to different classes. Imagine classifying emails as spam or not spam. A deterministic approach would aim to create a hyperplane that separates spam emails from legitimate ones with perfect accuracy.

On the other hand, stochastic approaches, with roots in statistical formulations, took a more probabilistic view. Pioneered by researchers like Yakov Tsypkin who introduced the concept of average risk minimization, these approaches focused on minimizing the overall error rate, acknowledging that perfect separation might not always be achievable.
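A small sketch can show what minimizing the average error looks like when perfect separation is impossible. Here the classifier is just a threshold on one number, and the dataset is deliberately made up so that the two classes overlap. The average error on the sample stands in for the average risk.

```python
def error_rate(threshold, values, labels):
    """Fraction of samples misclassified by the rule 'predict 1 if value > threshold'."""
    wrong = sum((v > threshold) != bool(y) for v, y in zip(values, labels))
    return wrong / len(values)


# Hypothetical scores with overlapping classes: no threshold separates them perfectly.
values = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0, 7.0, 8.0]
labels = [0, 0, 1, 0, 0, 1, 1, 1]

# Candidate thresholds halfway between neighbouring values.
candidates = [v + 0.5 for v in values[:-1]]
risks = {t: error_rate(t, values, labels) for t in candidates}
best_threshold = min(risks, key=risks.get)

print(f"best threshold: {best_threshold}, error rate: {risks[best_threshold]}")
```

On this data the best threshold is 5.5, and it still misclassifies one sample in eight. Accepting that remaining error, instead of demanding a perfect split, is the core of the stochastic view.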

A Setback and a Spark

However, the limitations of the perceptron, exposed in the influential 1969 book by Minsky and Papert, led to a period of decreased funding and research interest, known as the “first winter of AI.” This setback forced the field to re-evaluate its goals and approaches.

Spark Ignited: A New Era for Machine Learning

Fast forward to today, and machine learning is experiencing a renaissance, helped along by powerful tools like Apache Spark. Spark’s secret weapon lies in its in-memory processing capabilities, which let machine learning algorithms that make multiple passes over the data run significantly faster. Imagine training a model to recognize objects in images. With Spark, the data can be loaded into memory once, analyzed by the algorithm, and then reused on every further training pass without being read from disk again, whether on a single machine or distributed across a cluster. This in-memory processing offers a significant advantage over traditional disk-based methods.
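The benefit of keeping data in memory for multi-pass algorithms can be illustrated without a cluster. The sketch below is a plain-Python analogy, not Spark code: `CountingSource` is a made-up stand-in for a dataset on disk, and the "training" is a deliberately trivial iterative estimate of a mean. In Spark itself, the comparable step is asking for a dataset to be kept in memory with `cache()` or `persist()`.

```python
class CountingSource:
    """Stand-in for a dataset on disk; counts how often it is read in full."""

    def __init__(self, rows):
        self._rows = list(rows)
        self.reads = 0

    def read_all(self):
        self.reads += 1
        return list(self._rows)


def fit_mean(get_rows, passes=5, lr=0.5):
    """Iteratively estimate the mean; every pass needs the whole dataset."""
    estimate = 0.0
    for _ in range(passes):
        rows = get_rows()
        gradient = sum(estimate - r for r in rows) / len(rows)
        estimate -= lr * gradient
    return estimate


source = CountingSource([2.0, 4.0, 6.0, 8.0])

# Disk-style: every pass goes back to the source.
uncached_result = fit_mean(source.read_all)
reads_without_cache = source.reads

# Cache-style: read once, keep the rows in memory, reuse them on every pass.
source.reads = 0
cached_rows = source.read_all()
cached_result = fit_mean(lambda: cached_rows)
reads_with_cache = source.reads

print(f"reads without cache: {reads_without_cache}, with cache: {reads_with_cache}")
```

Both runs compute the same estimate, but the cached run touches the source once instead of five times. With a large dataset and dozens of passes, those avoided reads are where the speedup comes from.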

Furthermore, Spark’s machine learning library (MLlib) is under constant development. New features and functionalities are being added at a rapid pace, providing researchers and developers with a rich set of tools to tackle complex problems. This ongoing evolution ensures that Spark remains at the forefront of machine learning advancements.

The synergy between Spark and the ever-evolving field of machine learning is a powerful force. By offering efficient processing power and a continuously expanding toolkit, Spark is poised to propel machine learning to new heights. This will undoubtedly lead to more sophisticated models, faster training times, and ultimately, groundbreaking applications that will reshape our world.

Conclusion

The evolution of machine learning is a captivating story of continuous progress. From its theoretical beginnings in the 1940s to the powerful applications shaping our world today, machine learning has come a long way.

Early researchers laid the groundwork with groundbreaking concepts like the perceptron, paving the way for future advancements. The 1990s witnessed a shift towards data-driven approaches, leading to the development of effective algorithms like SVMs.

The arrival of the 2000s ushered in the era of deep learning. Inspired by the human brain, these complex models revolutionized various fields with their ability to learn intricate patterns from massive datasets.

As we look ahead, the evolution of machine learning shows no signs of slowing down. Researchers are constantly pushing boundaries, addressing challenges like computational demands, and developing more efficient training algorithms. Tools like Spark are further accelerating progress by providing powerful platforms for large-scale machine learning tasks.

The future of machine learning is brimming with potential. With continuous evolution, we can expect even more sophisticated models, faster training times, and groundbreaking applications that will continue to reshape our world in profound ways. This journey of discovery promises to unlock new possibilities and transform the very nature of how we interact with technology.