Docker is an open platform for developing, shipping, and running applications. Docker enables you to separate your applications from your infrastructure so you can deliver software quickly. With Docker, you can manage your infrastructure in the same ways you manage your applications. By taking advantage of Docker’s methodologies for shipping, testing, and deploying code, you can significantly reduce the delay between writing code and running it in production.

Containers package an application with everything it needs, so it runs the same way on a developer’s laptop, a build agent, and a production server. That consistency makes Docker a core part of DevOps practice, particularly for deployment automation and cloud security. In a DevOps context, using Docker means building, testing, and deploying software as containers throughout the team’s workflows.

Azure DevOps, Microsoft’s suite of development services, integrates with Docker. With Azure DevOps, teams can containerize their applications, automate build and release pipelines that produce and publish Docker images, and manage infrastructure as code, which lets them deploy containerized applications to Azure efficiently.

Docker images can also run on Kubernetes, a widely used container orchestrator. By packaging applications as container images and running them on Kubernetes clusters, teams gain scalability, reliability, and portability across cloud providers such as AWS.

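In terms of cloud security, containers isolate and encapsulate application components, which can improve an organization’s security posture. Security tools check containers at different stages: Checkov statically analyzes Dockerfiles and other infrastructure-as-code files for misconfigurations, and Twistlock (now part of Palo Alto Networks’ Prisma Cloud) scans container images for known vulnerabilities. Both can run as steps in Azure DevOps pipelines to enforce container security best practices.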
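To make static Dockerfile checks concrete, here is a small Python sketch of two checks of the kind IaC scanners such as Checkov perform: flagging a base image that has no tag or uses `latest`, and flagging a final stage that never switches to a non-root `USER`. It is an illustrative assumption, not Checkov’s or Twistlock’s actual rule set, and its simplified parser handles only comments, blank lines, and backslash line continuations.

```python
from __future__ import annotations


def parse_instructions(dockerfile: str) -> list[tuple[str, str]]:
    """Split a Dockerfile into (INSTRUCTION, arguments) pairs.

    Simplified parser (an assumption, not Docker's): skips blank lines and
    comments and joins lines that end in a backslash.
    """
    logical_lines: list[str] = []
    pending = ""
    for raw in dockerfile.splitlines():
        line = raw.strip()
        if not line or line.startswith("#"):
            continue
        if line.endswith("\\"):
            pending += line[:-1].rstrip() + " "  # continuation: keep collecting
            continue
        logical_lines.append(pending + line)
        pending = ""
    if pending.strip():
        logical_lines.append(pending.strip())
    pairs = []
    for line in logical_lines:
        keyword, _, args = line.partition(" ")
        pairs.append((keyword.upper(), args.strip()))
    return pairs


def check_dockerfile(dockerfile: str) -> list[str]:
    """Return findings for two common Dockerfile issues (illustrative only)."""
    findings: list[str] = []
    stages: set[str] = set()  # build-stage names from "FROM ... AS <name>"
    final_user = None         # last USER seen in the current (eventually final) stage

    for keyword, args in parse_instructions(dockerfile):
        if keyword == "FROM":
            tokens = [t for t in args.split() if not t.startswith("--")]  # drop --platform=...
            if not tokens:
                continue
            image = tokens[0]
            # "scratch", earlier build stages, and digest-pinned images have no tag to check.
            if image != "scratch" and image.lower() not in stages and "@" not in image:
                last_component = image.rsplit("/", 1)[-1]  # ignore any registry host:port
                tag = last_component.partition(":")[2] or "latest"
                if tag == "latest":
                    findings.append(f"base image '{image}' uses the 'latest' tag")
            if len(tokens) >= 3 and tokens[1].upper() == "AS":
                stages.add(tokens[2].lower())
            final_user = None  # each FROM starts a new stage with no USER set yet
        elif keyword == "USER":
            final_user = args.split(":", 1)[0]  # "user[:group]" -> "user"

    if final_user in (None, "root", "0"):
        findings.append("final stage never switches to a non-root USER")
    return findings
```

Running it on a Dockerfile that starts with `FROM python` and has no `USER` line reports both issues, while pinning `FROM python:3.12-slim` and adding `USER app` clears them. The base image itself may already set a non-root user, which a check that only reads the Dockerfile cannot see.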

Overall, Docker helps organizations deliver software faster, scale more easily, and apply consistent security practices throughout the software development lifecycle.

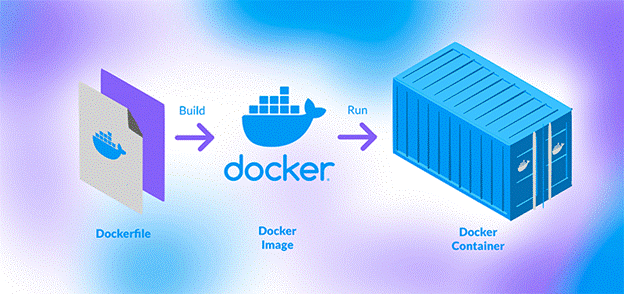

Think of Docker as a magic box where you can put everything your program needs to work: the code, the tools it runs with, its libraries, and its settings.

- Images: Imagine an image as a read-only snapshot of everything your program needs. It’s like taking a picture of your program and all its files so you can reuse it later.

- Containers: Now, think of a container as a running box built from that picture. You can start many containers from the same image on any machine that has Docker installed (and a compatible operating system and CPU architecture), and your program behaves the same way because everything it needs is inside the box.
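The relationship between images and containers can be modeled in a few lines of Python. This toy model is an illustrative assumption, not how Docker is implemented: an image is an immutable stack of file layers, and each container gets its own writable layer on top, so changes made inside one container never alter the image or other containers started from it. The class names and the `demo-app:1.0` image are hypothetical, and Docker’s real storage drivers also handle deletions, permissions, and much more.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass(frozen=True)
class Image:
    """A read-only snapshot: an ordered stack of layers mapping paths to file contents."""
    name: str
    layers: tuple[dict[str, str], ...]  # later layers override earlier ones


@dataclass
class Container:
    """A running instance of an image with its own private writable layer."""
    image: Image
    writable: dict[str, str] = field(default_factory=dict)

    def read(self, path: str) -> str:
        # Look in the container's own layer first, then down through the image layers.
        if path in self.writable:
            return self.writable[path]
        for layer in reversed(self.image.layers):
            if path in layer:
                return layer[path]
        raise FileNotFoundError(path)

    def write(self, path: str, content: str) -> None:
        # Copy-on-write: changes land only in this container's layer, never in the image.
        self.writable[path] = content


# Two containers started from the same (hypothetical) image.
app_image = Image("demo-app:1.0", ({"/etc/os-release": "debian"}, {"/app/main.py": "print('hi')"}))
first, second = Container(app_image), Container(app_image)
first.write("/app/main.py", "print('patched')")
```

After the write, `first` sees the patched file while `second` and the image still see the original, which is why you can throw a container away and start a fresh one from the same image at any time.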

What can I use Docker for?

In DevOps and cloud security, Docker can be a versatile and powerful tool. Here are some ways you can use Docker in these domains:

- Environment Standardization: Docker helps standardize development, testing, and production environments. You can create Docker images that contain all the dependencies, libraries, and configurations your applications need, ensuring consistency across environments.

- Continuous Integration/Continuous Deployment (CI/CD): Docker plays a crucial role in CI/CD pipelines. You can use Docker containers to build, test, and deploy applications in an automated and efficient manner. Containers provide isolation, making it easier to manage dependencies and ensure reproducibility in the deployment process.

- Microservices Architecture: Docker is well-suited for implementing a microservices architecture. You can containerize individual microservices, allowing them to be independently developed, deployed, and scaled. Docker’s lightweight nature and resource isolation make it ideal for running multiple microservices on the same host.

- Scalability and Resource Optimization: Docker containers share the host’s operating system kernel, so they are lighter than virtual machines and typically start in seconds. You can quickly run more or fewer container instances as demand changes, usually with an orchestrator such as Kubernetes, which makes it easier to handle varying workloads and use cloud resources efficiently.

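- Security Isolation: On Linux, Docker isolates containers from one another and from the host system using kernel features such as namespaces and control groups (cgroups). Each container runs in its own environment, which limits the impact of a compromised application; however, containers share the host kernel, so this isolation is weaker than that of a virtual machine. The wider Docker ecosystem adds image vulnerability scanning, private registries, and separate user-defined networks, and orchestrators such as Kubernetes add network policies for cloud deployments.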

- Portability: Docker containers are highly portable across platforms and cloud providers. You can build an application in a Docker container locally and deploy the same image to various cloud environments with few compatibility issues, as long as the target hosts run a compatible operating system and CPU architecture (for example, Linux on amd64 or arm64).

- Versioning and Rollback: Docker lets you version container images with tags (such as `myapp:1.4.2`) and immutable content digests, making it easy to roll back to a previous version if needed. This capability helps maintain application stability and troubleshoot issues.
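Tags and digests are what make versioning and rollback work. The Python sketch below splits an image reference into repository, tag, and digest following Docker’s naming convention (`[registry[:port]/]name[:tag][@digest]`, with `latest` as the default tag), then picks the most recent earlier image to roll back to. It assumes a deployment history is available as a plain list of image references, oldest first; that format and the helper names are hypothetical.

```python
from __future__ import annotations

from typing import NamedTuple, Optional


class ImageRef(NamedTuple):
    repository: str          # e.g. "registry.example.com:5000/team/app"
    tag: Optional[str]       # e.g. "1.4.2"; None when only a digest is given
    digest: Optional[str]    # e.g. "sha256:..."; identifies the exact image content


def parse_image_ref(ref: str) -> ImageRef:
    """Split '[registry[:port]/]name[:tag][@digest]' into its parts."""
    name, _, digest = ref.partition("@")
    tag = None
    # A ':' after the last '/' starts the tag; an earlier ':' belongs to a registry port.
    colon = name.rfind(":")
    if colon > name.rfind("/"):
        name, tag = name[:colon], name[colon + 1:]
    if tag is None and not digest:
        tag = "latest"  # Docker's default when neither tag nor digest is given
    return ImageRef(name, tag, digest or None)


def rollback_target(history: list[str]) -> str:
    """Return the most recent deployed reference that differs from the current one.

    `history` is a hypothetical deployment log of image references, oldest first.
    """
    if not history:
        raise ValueError("deployment history is empty")
    current = parse_image_ref(history[-1])
    for ref in reversed(history[:-1]):
        if parse_image_ref(ref) != current:  # skip redeployments of the current image
            return ref
    raise ValueError("no earlier version to roll back to")
```

Because a tag can later be moved to point at a different image, rolling back to a digest reference (`name@sha256:...`) is the safer choice when you need exactly the image that ran before.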

Overall, Docker’s versatility, portability, scalability, and security features make it an invaluable tool in DevOps and cloud security for streamlining development workflows, improving resource utilization, and enhancing application deployment and management processes.

Docker Architecture

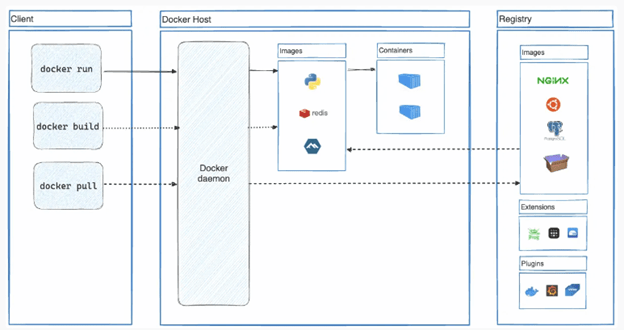

Docker uses a client-server architecture. The Docker client talks to the Docker daemon, which does the heavy lifting of building, running, and distributing your Docker containers. A Docker client and daemon can run on the same system, or you can connect a Docker client to a remote Docker daemon. The Docker client and daemon communicate using a REST API, over UNIX sockets or a network interface. Another Docker client is Docker Compose, which lets you work with applications consisting of a set of containers.
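Because the daemon speaks HTTP, a client does not need the Docker CLI at all. The following Python sketch uses only the standard library to send requests to the Docker Engine API over the daemon’s UNIX socket. It assumes the Linux default socket path `/var/run/docker.sock` (rootless Docker and Docker Desktop may use a different path) and that the current user has permission to access it. `UnixHTTPConnection`, `list_containers_path`, and `docker_get` are hypothetical helper names; the `/containers/json` endpoint and its `all` query parameter come from the Engine API.

```python
from __future__ import annotations

import http.client
import json
import socket
from urllib.parse import urlencode

# Linux default; rootless Docker and Docker Desktop may use a different socket path.
DEFAULT_SOCKET = "/var/run/docker.sock"


class UnixHTTPConnection(http.client.HTTPConnection):
    """An HTTPConnection that talks to a UNIX domain socket instead of a TCP port."""

    def __init__(self, socket_path: str, timeout: float = 10.0) -> None:
        # "localhost" only fills in the HTTP Host header; no TCP connection is made.
        super().__init__("localhost", timeout=timeout)
        self.socket_path = socket_path

    def connect(self) -> None:
        sock = socket.socket(socket.AF_UNIX, socket.SOCK_STREAM)
        sock.settimeout(self.timeout)
        sock.connect(self.socket_path)
        self.sock = sock


def list_containers_path(include_stopped: bool = False) -> str:
    """Engine API path for listing containers, like `docker ps` (or `docker ps -a`)."""
    return "/containers/json?" + urlencode({"all": "1" if include_stopped else "0"})


def docker_get(path: str, socket_path: str = DEFAULT_SOCKET):
    """Send a GET request to the Docker daemon and decode the JSON response."""
    conn = UnixHTTPConnection(socket_path)
    try:
        conn.request("GET", path)
        response = conn.getresponse()
        body = response.read()
        if response.status != 200:
            raise RuntimeError(f"Docker API returned HTTP {response.status}: {body!r}")
        return json.loads(body)
    finally:
        conn.close()
```

On a host where the daemon is running, `docker_get("/version")` should return the server details that `docker version` prints, and `docker_get(list_containers_path(include_stopped=True))` the containers that `docker ps -a` lists. The official SDKs wrap the same API and add error handling, API version negotiation, and streaming support.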

The main components of this architecture break down as follows:

- Client: The Docker client, most commonly the `docker` command-line interface (CLI), is the primary interface through which users interact with Docker. It turns commands such as `docker run` into API requests and sends them to the Docker daemon for execution. Other programs can skip the CLI and call the Docker Engine API directly, for example through Docker’s official SDKs.

- Docker Daemon: The Docker daemon (`dockerd`) is a background process that manages Docker objects such as images, containers, networks, and volumes. It builds images, creates and runs containers, and pushes and pulls images to and from registries. The daemon listens for API requests from Docker clients and executes them.

- Communication: The Docker client communicates with the Docker daemon through a REST API over HTTP. The connection can use a UNIX socket (by default `/var/run/docker.sock` on Linux, for local communication on the same system) or a network interface (for remote communication across systems). Because the interface is an ordinary HTTP API, any tool that can send HTTP requests can manage containers and images, not only the Docker CLI.

- Docker Compose: Docker Compose is a tool that simplifies the management of multi-container applications. It uses YAML configuration files to define the services, networks, and volumes required for an application. Docker Compose allows users to start, stop, and manage multiple containers as a single application stack, streamlining the development and deployment process.
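Compose starts services in dependency order based on each service’s `depends_on` entries, and stops them in reverse. The Python sketch below models that ordering with a topological sort from the standard library’s `graphlib` module (Python 3.9+). The three-service application and its `example/...` image names are hypothetical, the Python dict simply mirrors the structure a `compose.yaml` file would have, and the sketch is not Compose’s own implementation.

```python
from graphlib import TopologicalSorter  # standard library, Python 3.9+

# Hypothetical application: a Python dict mirroring the structure of a compose.yaml file.
compose = {
    "services": {
        "web": {"image": "example/web:1.0", "ports": ["8080:80"], "depends_on": ["api"]},
        "api": {"image": "example/api:1.0", "depends_on": ["db"]},
        "db": {
            "image": "postgres:16",
            "volumes": ["db-data:/var/lib/postgresql/data"],
        },
    },
    "volumes": {"db-data": {}},
}


def startup_order(compose_file: dict) -> list[str]:
    """Return service names so that every service comes after its dependencies."""
    graph = {
        # depends_on may be a list (short syntax) or a mapping (long syntax);
        # iterating over either yields the dependency names.
        name: set(spec.get("depends_on", []))
        for name, spec in compose_file["services"].items()
    }
    # TopologicalSorter takes a mapping of node -> predecessors and yields
    # predecessors first.
    return list(TopologicalSorter(graph).static_order())


print(startup_order(compose))  # ['db', 'api', 'web']
```

By default, Compose only waits for a dependency’s container to start, not for the service inside it to be ready; the long `depends_on` form with `condition: service_healthy` waits for a passing health check instead. In this sketch, a dependency cycle would make `static_order()` raise `graphlib.CycleError`.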

Overall, separating the client from the daemon adds flexibility: one client can manage daemons on remote hosts, and tools such as Docker Compose can drive the same daemon as the CLI. This client-server design provides a robust framework for building, deploying, and orchestrating container-based applications across different infrastructure environments.

Conclusion

Docker is a transformative technology that has changed how applications are developed, deployed, and managed in DevOps and cloud security. It lets developers package applications and their dependencies into lightweight, portable containers, which standardizes environments and keeps them consistent across every stage of the development lifecycle. Integrated into Continuous Integration/Continuous Deployment (CI/CD) pipelines, Docker automates testing and deployment, reducing time-to-market and improving efficiency. Microservices architectures benefit from Docker’s scalability and resource efficiency, which allow services to be developed and scaled independently.

Docker’s security features, such as container isolation and image scanning, strengthen cloud security by reducing the impact of potential vulnerabilities. Its portability allows deployment across platforms and cloud providers, while image versioning makes rollbacks straightforward and helps maintain application stability. In short, Docker’s versatility, portability, scalability, and security make it an indispensable tool for improving development workflows and resource utilization in DevOps and cloud security environments.