Live Instructor-Led Courses

7,500+ Candidates Trained

27,000+ Hours of Training Delivered



Kickstart your data science career with our premium NASSCOM- and Government of India-approved Full Stack Data Science Certified Programs. They cover tools and topics such as R, Python, SAS, deep learning, artificial intelligence, machine learning, data visualization with Tableau and Power BI, big data with Hadoop and Spark, and SQL, so you can become a data science professional in just 6 months.

Frequently Asked Questions

All the tools needed for an analytics/data science profile will be covered in detail: programming and data modelling tools (R, Python, SAS), big data tools (Hadoop, Hive, Pig, Spark and more), visualization and storytelling tools (Tableau, MS Excel, etc.) and data management tools (SQL, MS Excel). For the specific topics, please see the detailed curriculum; in short, it covers everything required to start your analytics/data science journey.

The program is structured as modules/courses that start with the basics and progressively build up to advanced topics. It includes regular weekend classes, doubt-clearing sessions, case studies, projects, regular assessments and a final exam at the end of the course.

We are one of the very few training partners of NASSCOM, and the only one in North India, to provide this kind of training program. A NASSCOM certificate is valued in the industry and gives participants a definite edge.

The full course takes 4 to 5 months (400 hours). Depending on the bundle of selected courses, the duration can range from 1 to 5 months.

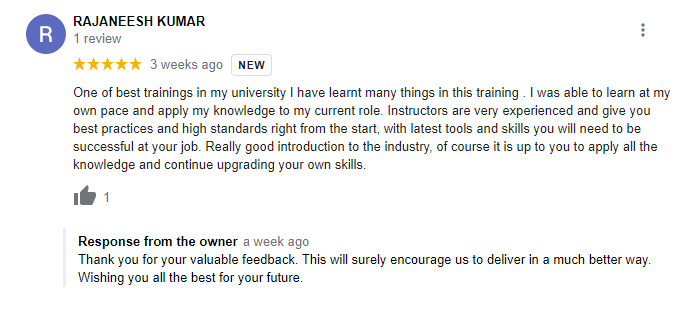

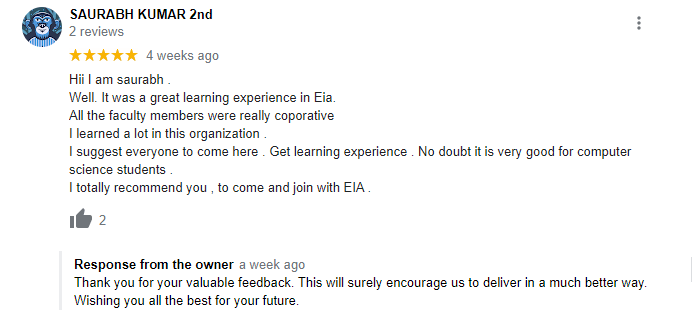

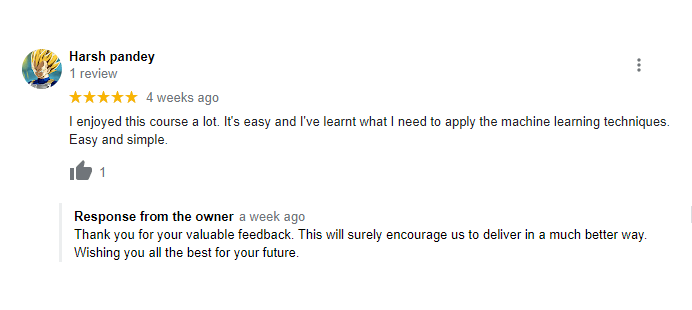

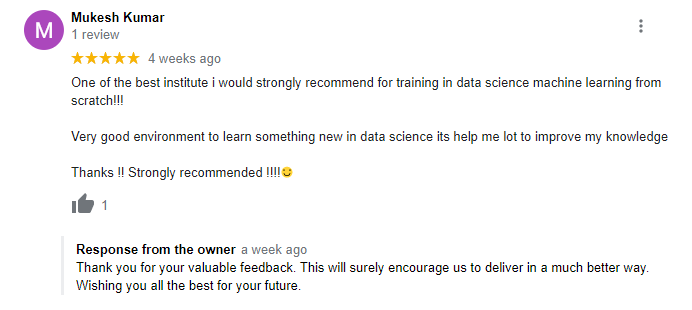

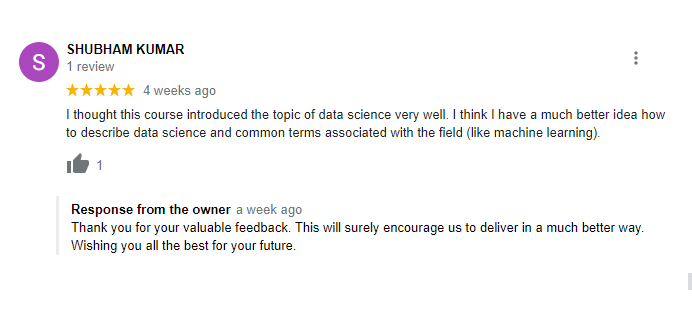

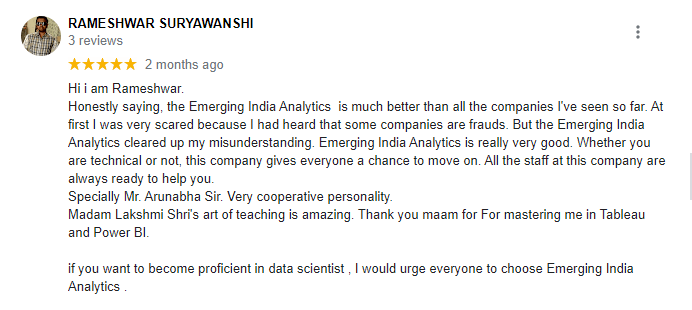

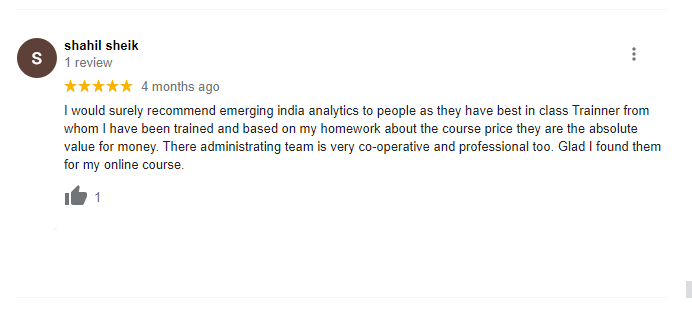

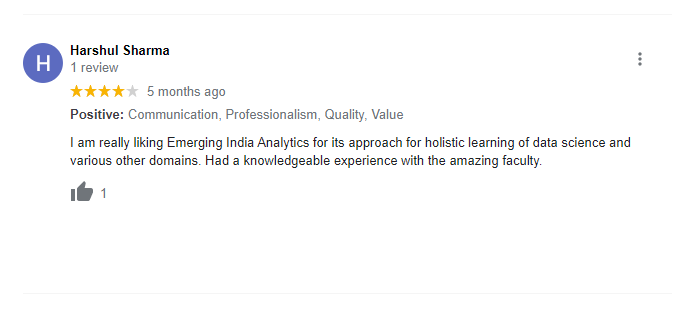

Testimonials

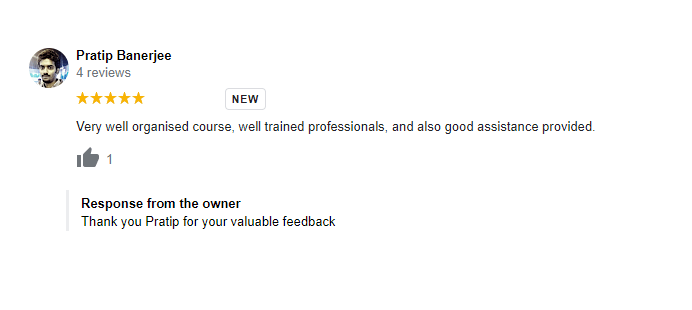

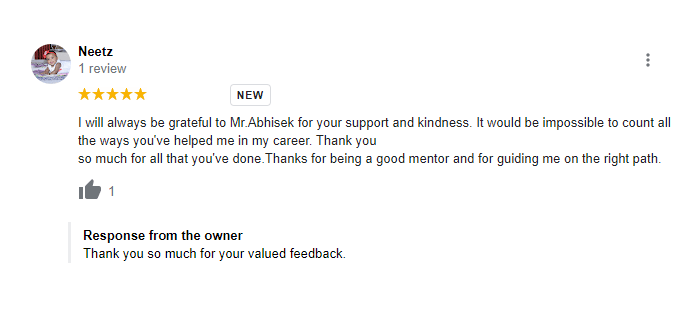

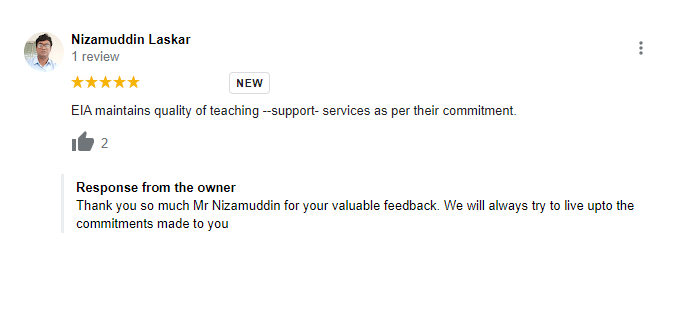

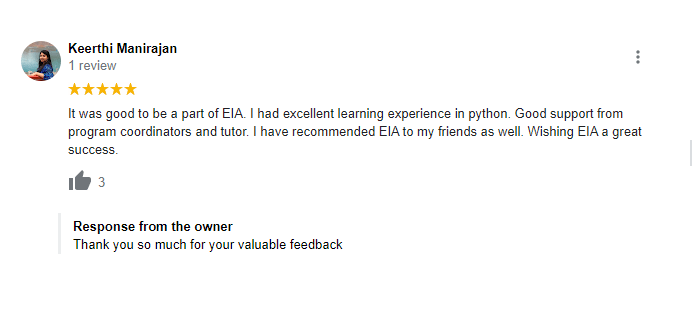

What Our Students Are Saying

The training approach is very good and the trainer is very patient while explaining. The trainers explain the concepts from scratch, so even beginners can follow easily. The trainers also focus on each and every student to check whether they have understood the concepts. Thanks a lot to the trainer, Abhinay.

Kishore Sai


About Our Data Science Training Institute in India

Emerging India Analytics is promoted by professionals from IITs and IIMs, MBAs, and experts from the education and IT industries. We are one of India’s fastest-growing analytics/IT consulting and training companies. We offer services in both consulting and training, including NASSCOM-certified professional programs (designed to bridge the gap between academia and industry) and consulting solutions in Data Analytics, Data Engineering, Cyber Security, IoT, Robotics, AI and Blockchain. We are also a proud NASSCOM member and a NASSCOM SSC Licensed Training Partner for the Data Science & Machine Learning program for PAN India. We have leveraged data to help many businesses tackle their most challenging problems and create value for them.