Generative Adversarial Networks (GANs) are a class of artificial intelligence algorithms used in machine learning for generating new data instances. They consist of two neural networks, namely the generator and the discriminator, which are trained in an adversarial manner. GANs have gained immense popularity due to their ability to generate realistic data, such as images, music, and text, that closely mimic the original input data.

Importance in Machine Learning and AI: GANs play a crucial role in advancing machine learning and artificial intelligence applications. They enable the creation of synthetic data, which can be used for training models, data augmentation, and enhancing dataset diversity. GANs also contribute to creative applications like image generation, style transfer, and even improving the resolution of images and videos.

What is a Generative Adversarial Network? Generative Adversarial Networks, commonly known as GANs, are deep learning models consisting of a generator and a discriminator network. The generator aims to create synthetic data samples that are indistinguishable from real data, while the discriminator distinguishes between real and fake samples. GANs operate on the principle of competition, where the generator and discriminator networks improve iteratively through adversarial training.

History and Development: The concept of GANs was introduced by Ian Goodfellow and his colleagues in 2014. Since then, GANs have undergone significant development, leading to various improvements and specialized architectures. Deep Generative Adversarial Networks (DAGANs), Wasserstein GANs (WGANs), and Conditional GANs (cGANs) are among the notable advancements in GAN technology.

Why were GANs Developed? The development of GANs stemmed from the need to generate realistic synthetic data for various purposes. GANs were motivated by the desire to overcome limitations in traditional generative models and to address challenges in image generation, data augmentation, and creative content generation. They have found applications in image generation, video synthesis, data generation for training models, and more. So this is a Brief Overview of GANs

Types of GANs:

Generative Adversarial Networks (GANs) have evolved into a spectrum of specialized models, each tailored to address unique challenges and unlock distinct capabilities. Let’s delve into some of the prominent types of GANs:

- Conditional GANs (cGANs):

Conditional GANs introduced the concept of conditioned generation within GANs. They operate based on additional information, such as labels, to guide the Generator in producing specific types of data. For instance, providing a ‘cat’ label results in the generation of cat images, offering controlled data generation and aiding label-specific tasks.

- Deep Convolutional GANs (DCGANs):

DCGANs revolutionized GAN architecture by incorporating convolutional layers in both the Generator and Discriminator. This structural enhancement enables DCGANs to excel in handling image-related tasks efficiently, capturing spatial structures and ensuring stable training dynamics.

- Wasserstein GANs (WGANs):

WGANs introduced a novel loss function, the Wasserstein loss, refining the Discriminator’s assessment of generated data. This improvement significantly enhances training stability, providing a nuanced evaluation of realness/fakeness and offering a smoother training experience.

- CycleGANs:

CycleGANs specialize in image-to-image translation tasks without paired examples. They excel in tasks like style transfer and domain conversion, leveraging cycle consistency loss to maintain structural integrity and ensure accurate image reconstruction.

- Super-Resolution GAN (SRGAN):

SRGANs tackle the challenge of image super-resolution, preserving high-frequency details while enhancing image resolution. By utilizing perceptual loss functions and focusing on high-level features, SRGANs generate visually superior images essential for applications demanding high-resolution visuals.

- Vanilla GAN and StyleGAN:

Vanilla GANs form the foundational model for all GAN variants, showcasing simplicity, versatility, and effectiveness in generating diverse data types. On the other hand, StyleGAN introduces layer-wise style control, noise inputs, stochastic variation, and progressive growing, making it ideal for generating highly detailed and stylized images.

Each GAN variant brings its unique features and advantages, contributing to advancements in image generation, style manipulation, data augmentation, and more. With the diverse arsenal of GANs available, there’s a specialized model catering to every data generation and manipulation need in the realm of machine learning and AI.

Architecture of GANs

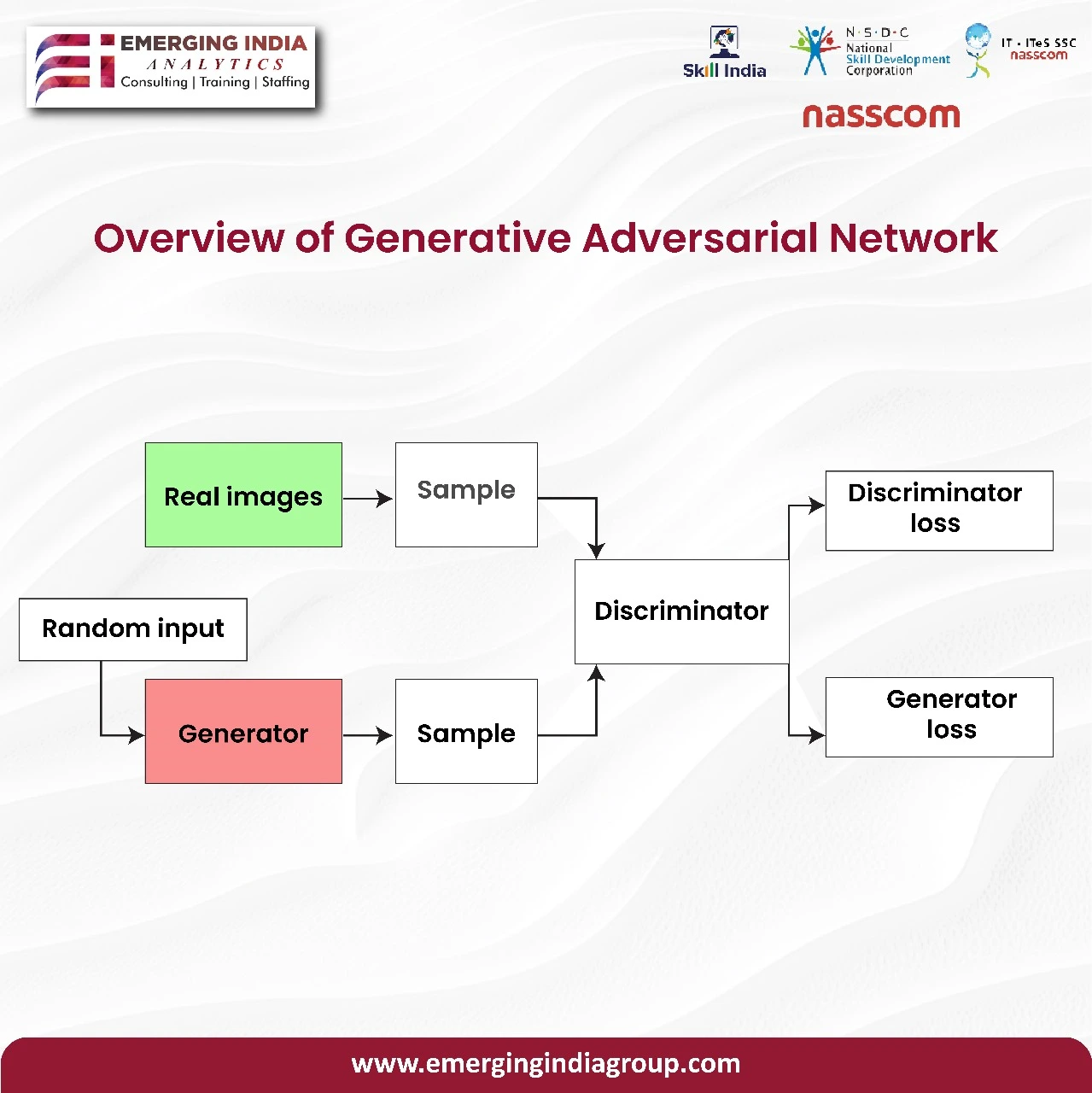

The architecture of Generative Adversarial Networks (GANs) revolves around two key components: the Generator and the Discriminator, each playing a crucial role in the adversarial learning process.

- Generator:

The Generator in GANs operates akin to a “thief” in a competitive scenario. It is a convolutional neural network (CNN) designed to generate synthetic data that closely resembles real data. The generator’s primary objective is to produce outputs that are indistinguishable from authentic data. It begins with a fixed-length random vector, known as a noisy input vector, which serves as the initial input. Over time, through the adversarial training process, the Generator learns to create data instances that mimic the true data distribution.

Key Components of Generator:

- Noisy Input Vector: Represents the random input provided to the Generator.

- Generator Network: Transforms the noisy input into a meaningful data instance.

- Discriminator Network: Evaluates and classifies the generated data as real or fake.

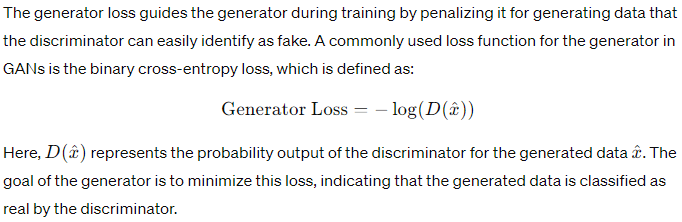

- Generator Loss: Guides the Generator by penalizing it for generating data that the Discriminator can easily identify as fake.

- Discriminator:

Contrary to the Generator, the Discriminator acts as the “police” in the GAN architecture. It is a deconvolutional neural network responsible for distinguishing between real and generated data samples. The Discriminator’s role is to correctly identify whether an input data sample is authentic or generated by the Generator. Through iterative training, the Discriminator refines its ability to classify data accurately, contributing to the adversarial learning process.

Key Components of Discriminator:

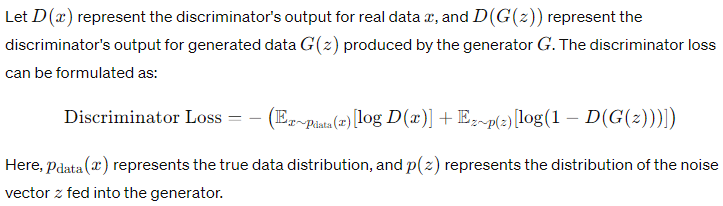

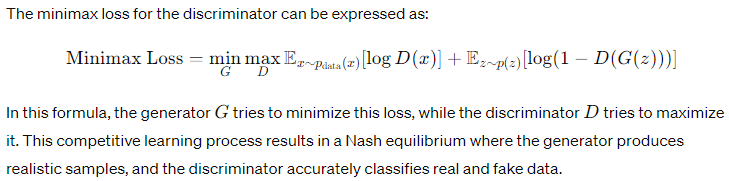

- Discriminator Loss: Measures the Discriminator’s ability to classify real and fake data correctly. It penalizes misclassifications to improve the Discriminator’s accuracy.

- Minimax Loss Formula: Represents the optimization objective where the Discriminator aims to minimize the likelihood of misclassification.

How GANs Work:

The working principle of GANs revolves around adversarial learning, characterized by a competitive interplay between the Generator and Discriminator. The iterative process involves:

- Initialization: Random weights are assigned to initialize both the Generator and Discriminator.

- Training Iterations: The Generator generates data samples, while the Discriminator distinguishes between real and fake samples.

- Adversarial Learning: The Generator adjusts its weights to produce more convincing samples, aiming to fool the Discriminator. Simultaneously, the Discriminator refines its weights for accurate classification.

- Convergence: The training continues until equilibrium is achieved, where the Generator generates data indistinguishable from real data, and the Discriminator struggles to differentiate between real and fake samples.

Implementation of GAN

A Python code snippet demonstrates the implementation of GANs using PyTorch library, showcasing the creation and training of Generator and Discriminator models to generate synthetic data.

Here’s an example of implementing Generative Adversarial Networks (GANs) using the PyTorch library in Python. The code consists of creating and training both the Generator and Discriminator models to generate synthetic data.

# Step-1 : Import necessary libraries

import torch

import torch.nn as nn

import torch.optim as optim

import torchvision

from torchvision import datasets, transforms

import matplotlib.pyplot as plt

import numpy as np

# Set device

device = torch.device(‘cuda’ if torch.cuda.is_available() else ‘cpu’)

# Step-2: Define data transformations

transform = transforms.Compose([

transforms.ToTensor(),

transforms.Normalize((0.5, 0.5, 0.5), (0.5, 0.5, 0.5))

])

# Step-3: Load and preprocess the dataset

train_dataset = datasets.CIFAR10(root=’./data’, train=True, download=True, transform=transform)

dataloader = torch.utils.data.DataLoader(train_dataset, batch_size=32, shuffle=True)

# Step-4: Set up model parameters

latent_dims = 100

learning_rate = 0.0002

beta_1 = 0.5

beta_2 = 0.999

num_epochs = 5

# Step-5: Create Generator class

class Generator(nn.Module):

def __init__(self, latent_dims):

super(Generator, self).__init__()

self.model = nn.Sequential(

nn.Linear(latent_dims, 128 * 8 * 8),

nn.ReLU(),

nn.Unflatten(1, (128, 8, 8)),

nn.Upsample(scale_factor=2),

nn.Conv2d(128, 128, kernel_size=3, padding=1),

nn.BatchNorm2d(128, momentum=0.78),

nn.ReLU(),

nn.Upsample(scale_factor=2),

nn.Conv2d(128, 64, kernel_size=3, padding=1),

nn.BatchNorm2d(64, momentum=0.78),

nn.ReLU(),

nn.Conv2d(64, 3, kernel_size=3, padding=1),

nn.Tanh()

)

def forward(self, z):

img = self.model(z)

return img

# Step-6: Create Discriminator class

class Discriminator(nn.Module):

def __init__(self):

super(Discriminator, self).__init__()

self.model = nn.Sequential(

nn.Conv2d(3, 32, kernel_size=3, stride=2, padding=1),

nn.LeakyReLU(0.2),

nn.Dropout(0.25),

nn.Conv2d(32, 64, kernel_size=3, stride=2, padding=1),

nn.ZeroPad2d((0, 1, 0, 1)),

nn.BatchNorm2d(64, momentum=0.82),

nn.LeakyReLU(0.25),

nn.Dropout(0.25),

nn.Conv2d(64, 128, kernel_size=3, stride=2, padding=1),

nn.BatchNorm2d(128, momentum=0.82),

nn.LeakyReLU(0.2),

nn.Dropout(0.25),

nn.Conv2d(128, 256, kernel_size=3, stride=1, padding=1),

nn.BatchNorm2d(256, momentum=0.8),

nn.LeakyReLU(0.25),

nn.Dropout(0.25),

nn.Flatten(),

nn.Linear(256 * 5 * 5, 1),

nn.Sigmoid()

)

def forward(self, img):

validity = self.model(img)

return validity

# Step-7: Build the Generative Adversarial Network architecture

generator = Generator(latent_dims).to(device)

discriminator = Discriminator().to(device)

# Loss function

adversarial_loss = nn.BCELoss()

# Optimizers

optimizer_G = optim.Adam(generator.parameters(), lr=learning_rate, betas=(beta_1, beta_2))

optimizer_D = optim.Adam(discriminator.parameters(), lr=learning_rate, betas=(beta_1, beta_2))

# Step-8: Train the GAN model

# Training loop

for epoch in range(num_epochs):

for i, batch in enumerate(dataloader):

# Convert list to tensor

real_images = batch[0].to(device)

# Adversarial ground truths

valid = torch.ones(real_images.size(0), 1, device=device)

fake = torch.zeros(real_images.size(0), 1, device=device)

# Configure input

real_images = real_images.to(device)

# ———————

# Train Discriminator

# ———————

optimizer_D.zero_grad()

# Sample noise as generator input

z = torch.randn(real_images.size(0), latent_dims, device=device)

# Generate a batch of images

fake_images = generator(z)

# Measure discriminator’s ability

real_loss = adversarial_loss(discriminator(real_images), valid)

fake_loss = adversarial_loss(discriminator(fake_images.detach()), fake)

d_loss = (real_loss + fake_loss) / 2

# Backward pass and optimize

d_loss.backward()

optimizer_D.step()

# —————–

# Train Generator

# —————–

optimizer_G.zero_grad()

# Generate a batch of images

gen_images = generator(z)

# Adversarial loss

g_loss = adversarial_loss(discriminator(gen_images), valid)

# Backward pass and optimize

g_loss.backward()

optimizer_G.step()

# ———————

# Progress Monitoring

# ———————

if (i + 1) % 100 == 0:

print(

f”Epoch [{epoch+1}/{num_epochs}] Batch {i+1}/{len(dataloader)} “

f”Discriminator Loss: {d_loss.item():.4f} “

f”Generator Loss: {g_loss.item():.4f}”

)

# Save

if (epoch + 1) % 5 == 0:

with torch.no_grad():

z = torch.randn(16, latent_dims, device=device)

generated = generator(z).detach().cpu()

grid = torchvision.utils.make_grid(generated, nrow=4, normalize=True)

plt.imshow(np.transpose(grid, (1, 2, 0)))

plt.axis(“off”)

plt.show()

Explanation of Implementation:

- Imports and Setup:

- Import necessary libraries like PyTorch, torchvision, and matplotlib.

- Set the device to GPU if available, else CPU.

- Define data transformations for preprocessing the dataset.

- Load and Preprocess Dataset:

- Load the CIFAR10 dataset and apply transformations.

- Create a data loader for batch processing.

- Model Setup:

- Define model parameters such as latent dimensions, learning rate, and optimizer settings.

- Create the Generator and Discriminator classes using PyTorch’s nn.Module.

- Training Loop:

- Iterate through the dataset batches for a specified

Application of Generative Adversarial Networks (GANs)

These are the following applications of GANs:

Real-world Applications of GANs

Generative Adversarial Networks (GANs) have found diverse applications across various domains. One prominent application is image generation, where GANs are used to create realistic images from scratch. For example, in the field of computer graphics, GANs are employed to generate lifelike scenes and characters.

Another critical use case is data augmentation. GANs can generate synthetic data that mimics real data, aiding in expanding training datasets and improving model generalization. This is particularly beneficial in scenarios where obtaining large amounts of labeled data is challenging.

Advantages of GANs

The benefits of using GANs in AI and machine learning are manifold. One advantage is their ability to generate diverse and high-quality data, which can enhance the performance of models trained on limited datasets. GANs also enable unsupervised learning, allowing models to learn from unlabelled data and discover underlying patterns autonomously.

In deep learning tasks, GANs contribute to model enhancement by providing novel data samples for training. This can lead to more robust and accurate models, especially in areas like image classification and natural language processing.

Disadvantages of GANs

Despite their advantages, GANs come with certain challenges. One common issue is training instability, where the generator and discriminator struggle to reach equilibrium during training, leading to oscillations in model performance. Mode collapse is another challenge, where the generator produces limited variations of outputs, reducing diversity in generated samples.

In tasks such as image generation and data augmentation, ensuring diversity and avoiding overfitting are ongoing challenges when using GANs. Additionally, GANs require careful hyperparameter tuning and monitoring to prevent issues like vanishing gradients and gradient explosions.

Challenges Faced by Generative Adversarial Networks (GANs)

Common challenges in using GANs include convergence issues, where the model fails to converge to a stable solution, and evaluation difficulties, as assessing the quality of generated samples can be subjective. Mode dropping, where the generator focuses on a few modes of the data distribution, is another challenge that affects the diversity of generated outputs.

To overcome these challenges, researchers and practitioners are exploring novel architectures such as conditional GANs and cycle-consistent GANs, implementing regularization techniques, and enhancing training strategies to improve GAN stability and performance.

Conclusion

In conclusion, Generative Adversarial Networks (GANs) have revolutionized the field of AI and machine learning by enabling realistic data generation, model enhancement, and unsupervised learning capabilities. Despite facing challenges like training instability and mode collapse, ongoing research and advancements in GAN technology hold promise for addressing these issues and unlocking new possibilities in data-driven applications.