Welcome to the world of neural networks, where machines learn from examples rather than from hand-written rules. In recent years, deep learning has taken the tech industry by storm, transforming everything from image recognition to speech synthesis. But did you know that this technology has its roots in a simple mathematical model known as the perceptron? Join us on a journey through time and discover how neural networks evolved from their humble beginnings into one of the most promising fields in artificial intelligence today. Get ready for an exploration of the history and future of machine learning!

Introduction to Neural Networks

In 1957, Frank Rosenblatt proposed the perceptron, one of the first neural network algorithms that could be trained from data. The perceptron was a single-layer neural network that could learn to classify its inputs into two classes. This was a major breakthrough at the time, as it showed that computers could learn from data rather than rely only on explicit programming.

However, the perceptron had its limitations. It could only solve linearly separable problems, meaning problems where a single straight line (or plane) divides the two classes, so it could not learn even a simple pattern such as XOR. In 1969, Marvin Minsky and Seymour Papert published a book called “Perceptrons” which detailed the limitations of the perceptron algorithm. Partly as a result of this work, research on neural networks stalled for many years.
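The limitation described above can be seen in a few lines of Python. The following is a minimal sketch, not Rosenblatt's original formulation: the function names, the learning rate, and the number of epochs are illustrative assumptions. It trains the same perceptron on AND, which a straight line can separate, and on XOR, which no straight line can.

```python
def predict(weights, bias, x):
    """Return 1 if the weighted sum of the inputs is positive, else 0."""
    total = sum(w * xi for w, xi in zip(weights, x)) + bias
    return 1 if total > 0 else 0


def train_perceptron(samples, epochs=20, lr=1.0):
    """Perceptron learning rule: shift the weights by the prediction error."""
    weights, bias = [0.0, 0.0], 0.0
    for _ in range(epochs):
        for x, target in samples:
            error = target - predict(weights, bias, x)
            weights = [w + lr * error * xi for w, xi in zip(weights, x)]
            bias += lr * error
    return weights, bias


def accuracy(samples, weights, bias):
    """Fraction of samples the trained perceptron classifies correctly."""
    hits = sum(predict(weights, bias, x) == target for x, target in samples)
    return hits / len(samples)


# Truth tables written as (inputs, label) pairs.
AND = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]
XOR = [((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 0)]

and_w, and_b = train_perceptron(AND)
xor_w, xor_b = train_perceptron(XOR)
print("AND accuracy:", accuracy(AND, and_w, and_b))
print("XOR accuracy:", accuracy(XOR, xor_w, xor_b))
```

The AND case is learned perfectly, while the XOR case always leaves at least one input misclassified, however long training runs.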

It wasn’t until 1986 that neural networks made a comeback, when Rumelhart, Hinton, and Williams popularized the backpropagation algorithm. With backpropagation, networks with hidden layers could be trained to solve non-linear problems, which made them much more powerful.
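To make the idea concrete, here is a small sketch of backpropagation on the XOR problem. It is an illustration under stated assumptions, not the formulation from the 1986 paper: the network size (three hidden nodes), the sigmoid activation, the squared-error loss, the learning rate, and all function names are choices made for this example.

```python
import math
import random


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def init_params(hidden_units=3, seed=0):
    """Small random weights for a 2-input, one-hidden-layer, 1-output network."""
    rng = random.Random(seed)
    w_hidden = [[rng.uniform(-1, 1) for _ in range(2)] for _ in range(hidden_units)]
    b_hidden = [0.0] * hidden_units
    w_out = [rng.uniform(-1, 1) for _ in range(hidden_units)]
    return w_hidden, b_hidden, w_out, 0.0


def forward(params, x):
    """Return the hidden activations and the output for one input."""
    w_hidden, b_hidden, w_out, b_out = params
    hidden = [sigmoid(sum(w * xi for w, xi in zip(row, x)) + b)
              for row, b in zip(w_hidden, b_hidden)]
    output = sigmoid(sum(w * h for w, h in zip(w_out, hidden)) + b_out)
    return hidden, output


def backprop(params, x, target):
    """Gradients of 0.5 * (output - target) ** 2 for every parameter."""
    _, _, w_out, _ = params
    hidden, output = forward(params, x)
    # Error signal at the output node (chain rule through the sigmoid).
    delta_out = (output - target) * output * (1 - output)
    # Push the error signal back through the output weights to each hidden node.
    delta_hidden = [delta_out * w * h * (1 - h) for w, h in zip(w_out, hidden)]
    grad_w_hidden = [[d * xi for xi in x] for d in delta_hidden]
    grad_w_out = [delta_out * h for h in hidden]
    return grad_w_hidden, delta_hidden, grad_w_out, delta_out


def train(params, data, epochs=5000, lr=0.5):
    """Plain stochastic gradient descent using the backprop gradients."""
    w_hidden, b_hidden, w_out, b_out = params
    for _ in range(epochs):
        for x, target in data:
            g_wh, g_bh, g_wo, g_bo = backprop(
                (w_hidden, b_hidden, w_out, b_out), x, target)
            w_hidden = [[w - lr * g for w, g in zip(row, g_row)]
                        for row, g_row in zip(w_hidden, g_wh)]
            b_hidden = [b - lr * g for b, g in zip(b_hidden, g_bh)]
            w_out = [w - lr * g for w, g in zip(w_out, g_wo)]
            b_out -= lr * g_bo
    return w_hidden, b_hidden, w_out, b_out


XOR = [((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 0)]
trained = train(init_params(), XOR)
for x, target in XOR:
    print(x, target, round(forward(trained, x)[1], 3))
```

The key step is in `backprop`: the error at the output is passed backwards through the output weights, so each hidden node receives its share of the blame. How well the final predictions match the targets depends on the random starting weights and the number of epochs.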

Neural networks are now a major research area with many different architectures being proposed. Some of the most popular include convolutional neural networks (CNNs) and recurrent neural networks (RNNs). CNNs are well-suited for image classification tasks while RNNs are well-suited for sequence modeling tasks such as language translation.

There is still much research to be done in this field, and new architectures are being proposed all the time. But one thing is for sure: neural networks have come a long way since their humble beginnings in the 1950s.

History and Evolution of Neural Networks

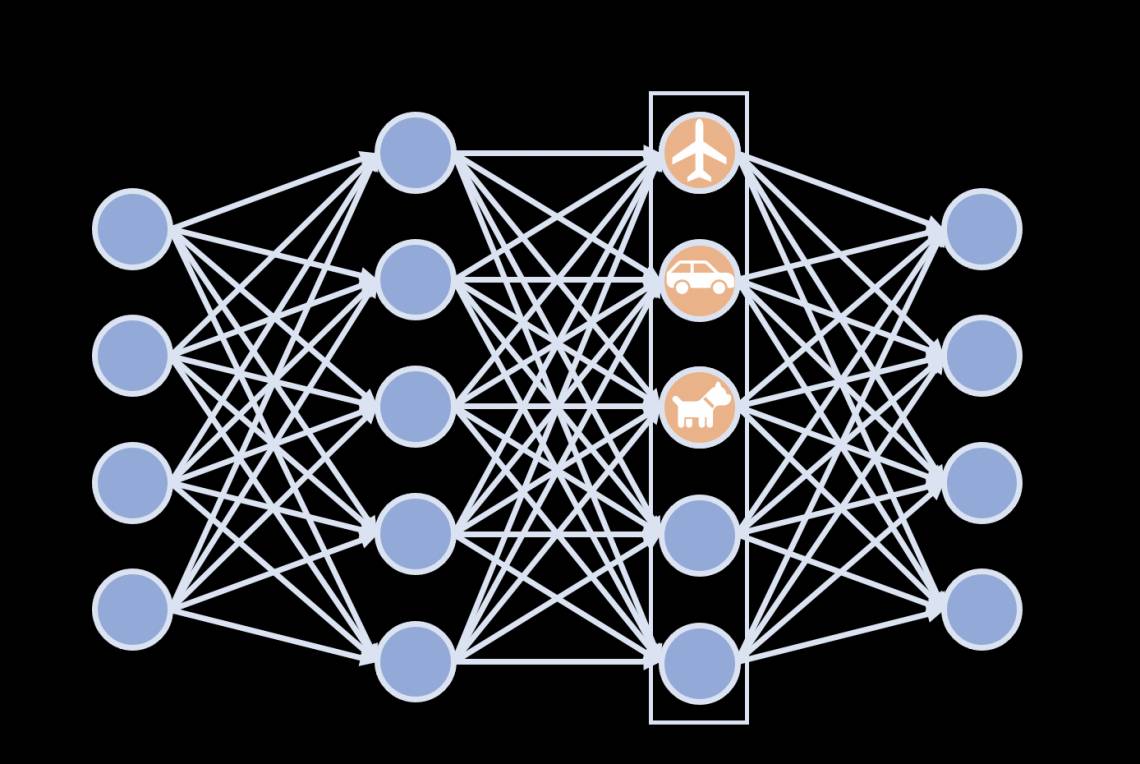

Neural networks are a type of artificial intelligence model loosely inspired by the brain. They are composed of a large number of interconnected processing nodes, or neurons, that can learn to recognize patterns of input and produce corresponding outputs.

Neural networks have been around since the 1950s, but they have undergone a significant evolution in recent years. Early neural networks were limited in their ability to learn and generalize from data. This changed in the 1980s, when backpropagation made it practical to train networks with hidden layers, allowing them to learn more complex patterns and achieve greater accuracy.

With the advent of deep learning in the early 2010s, neural networks once again saw a major boost in their capabilities. Deep learning is a technique for training neural networks that uses multiple layers of interconnected processing nodes. This allows for more complex patterns to be learned and recognized by the network.

Today, neural networks are being used for a variety of tasks, including image recognition, natural language processing, and even self-driving cars. As they continue to evolve, it is likely that we will see even more amazing applications for this powerful artificial intelligence tool.

How do Neurons Work?

Neurons are nerve cells that transmit information throughout the body. They are the basic unit of the nervous system and are responsible for everything from processing sensory information to coordinating movement.

Neurons are made up of a cell body, which contains the nucleus, and a number of dendrites and axons. Dendrites are short, branching extensions that receive signals from other neurons, while axons are long, single extensions that carry signals away from the cell body.

When a neuron receives a signal, it passes that signal down its dendrites to the cell body. If the signal is strong enough, it will trigger an electrical impulse in the cell body, which then travels down the axon to the next neuron. This process repeats itself until the signal reaches its destination.

Artificial Neural Networks Explained

Artificial neural networks are loosely modeled after the brain and nervous system. They are composed of neuron-like nodes joined by weighted connections, which play the role of synapses. These networks can learn to recognize patterns of input data and make predictions based on those patterns.

Neural networks have been around for decades, but they have only recently gained popularity due to advances in computing power and data storage. Deep learning uses neural networks with many layers and is particularly well-suited for big data applications. The many layers of nodes make deep networks more effective at learning complex patterns than shallow neural networks.
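A single neuron-like node can be sketched in a few lines. This is a simplified illustration, and the weights, bias, and choice of activation functions below are hypothetical values picked for the example. The `step` activation mirrors the all-or-nothing firing of a biological neuron, while `sigmoid` is a smooth alternative commonly used when training.

```python
import math


def step(z):
    """All-or-nothing activation: fire only above the threshold."""
    return 1.0 if z > 0 else 0.0


def sigmoid(z):
    """Smooth activation that squashes any value into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def neuron(inputs, weights, bias, activation=sigmoid):
    """Weighted sum of the inputs plus a bias, passed through an activation."""
    z = sum(w * x for w, x in zip(weights, inputs)) + bias
    return activation(z)


inputs = [0.9, 0.1, 0.4]
weights = [1.5, -2.0, 0.5]  # hypothetical connection strengths
bias = -1.0                 # hypothetical offset that shifts the firing threshold

print(neuron(inputs, weights, bias))
print(neuron(inputs, weights, bias, activation=step))
```

Learning, in this picture, means adjusting `weights` and `bias` so that the node's output moves closer to the desired one.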

What is Deep Learning?

Deep learning is a subset of machine learning in which algorithms are used to model high-level abstractions in data. It often outperforms other machine learning approaches on tasks such as image and speech recognition, where useful features are hard to design by hand.

Deep learning algorithms are based on artificial neural networks (ANNs), which are themselves loosely based on the brain’s structure and function. ANNs are made up of layers of interconnected nodes, or neurons, where each layer transforms the output of the previous one.
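The layered structure can be sketched as a forward pass, in which the output of each layer becomes the input of the next. The example below is a minimal illustration: the 3 → 2 → 1 layout, the `tanh` activation, and the hand-picked parameter values are all assumptions, not taken from any particular system.

```python
import math


def dense_layer(inputs, weights, biases):
    """One fully connected layer: each row of `weights` feeds one node."""
    return [math.tanh(sum(w * x for w, x in zip(row, inputs)) + b)
            for row, b in zip(weights, biases)]


def forward(inputs, layers):
    """Pass the inputs through every layer in order."""
    activations = inputs
    for weights, biases in layers:
        activations = dense_layer(activations, weights, biases)
    return activations


# Hypothetical, hand-picked parameters for a 3 -> 2 -> 1 network.
layers = [
    ([[0.5, -0.2, 0.1], [0.3, 0.8, -0.5]], [0.0, 0.1]),  # hidden layer, 2 nodes
    ([[1.0, -1.0]], [0.0]),                               # output layer, 1 node
]

output = forward([1.0, 0.5, -1.0], layers)
print(output)
```

Adding depth is a matter of appending more `(weights, biases)` pairs to `layers`; in a real network those values would be learned rather than written by hand.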

The first mathematical model of an artificial neuron was proposed in 1943 by Warren McCulloch and Walter Pitts. Their work was later extended by Frank Rosenblatt, who developed the perceptron, an early type of neural network that could be trained from data.

In the 1980s, connectionism, a branch of cognitive science that explores the idea that mental processes can be described as networks of interconnected nodes, began to gain popularity. This led to renewed interest in neural networks and their potential for artificial intelligence (AI) applications.

In 1986, David Rumelhart, Geoffrey Hinton, and Ronald Williams published a paper titled “Learning Representations by Back-propagating Errors,” which popularized the backpropagation algorithm for training neural networks. This algorithm made it possible to train networks with hidden layers, which led to much more accurate predictions by ANNs and paved the way for modern deep learning methods.

Today, deep learning is used for a variety of tasks including image classification, object detection, facial recognition, and natural language processing (NLP).

Types of Deep Learning Algorithms

Deep learning algorithms are usually grouped by how they learn. There are three main types: supervised, unsupervised, and reinforcement learning.

Supervised learning is where the algorithm is given a set of training data, which includes both input data and desired output labels. The algorithm then learns to map the input data to the output labels. This type of algorithm is often used for tasks such as image classification and handwriting recognition.
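As a rough illustration of supervised learning, the sketch below fits a model to labelled examples and then predicts labels for inputs it has not seen. To keep it short it uses a one-feature logistic model, in effect a single neuron, rather than a deep network; the toy data, learning rate, and function names are assumptions made for this example.

```python
import math


def predict_proba(w, b, x):
    """Estimated probability that input x belongs to class 1."""
    return 1.0 / (1.0 + math.exp(-(w * x + b)))


def fit(examples, epochs=200, lr=0.5):
    """Gradient descent on the log-loss of a one-feature logistic model."""
    w, b = 0.0, 0.0
    n = len(examples)
    for _ in range(epochs):
        grad_w = sum((predict_proba(w, b, x) - y) * x for x, y in examples) / n
        grad_b = sum(predict_proba(w, b, x) - y for x, y in examples) / n
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b


def predict(w, b, x):
    """Turn the probability into a hard 0/1 label."""
    return 1 if predict_proba(w, b, x) > 0.5 else 0


# Toy training set of (input, desired output label) pairs.
training_data = [(-2.0, 0), (-1.0, 0), (1.0, 1), (2.0, 1)]
w, b = fit(training_data)

# Predict labels for inputs that were not in the training set.
print([predict(w, b, x) for x in (-3.0, -0.5, 0.5, 3.0)])
```

The same loop of predicting, measuring the error against the label, and adjusting the parameters is what larger networks do at scale.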

Unsupervised learning is where the algorithm is only given input data, without any desired output labels. The algorithm then has to learn to find structure in the data on its own. This type of algorithm is often used for tasks such as si

Challenges & Limitations of Deep Learning

Deep learning has been incredibly successful in a wide range of applications, from image classification to machine translation. However, deep learning models are often opaque, making it difficult to understand why they produce a given prediction. Additionally, deep learning models can be extremely data-hungry, requiring large amounts of training data in order to generalize well. Finally, deep learning models are often brittle, meaning that small changes to the input data can cause the model to make large mistakes.
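The brittleness point can be illustrated with a toy example. The sketch below uses a hand-built linear classifier with 100 features rather than a trained deep network, and all weights and inputs are hypothetical. It shows how a change of at most 0.02 per feature, when aligned with the model's weights, adds up to a flipped prediction.

```python
def score(weights, bias, x):
    """Raw model output: positive means class 1, otherwise class 0."""
    return sum(w * xi for w, xi in zip(weights, x)) + bias


def classify(weights, bias, x):
    return 1 if score(weights, bias, x) > 0 else 0


def sign(value):
    """Return -1, 0, or 1 depending on the sign of value."""
    return (value > 0) - (value < 0)


def nudge_toward_boundary(weights, bias, x, epsilon):
    """Move each feature by at most epsilon against the current prediction."""
    direction = -1 if classify(weights, bias, x) == 1 else 1
    return [xi + direction * epsilon * sign(w) for w, xi in zip(weights, x)]


# Hypothetical 100-feature model and an input it assigns to class 1.
weights = [1.0, -1.0] * 50
bias = 0.0
original = [0.01 * sign(w) for w in weights]

adversarial = nudge_toward_boundary(weights, bias, original, epsilon=0.02)

print("original class:   ", classify(weights, bias, original))
print("adversarial class:", classify(weights, bias, adversarial))
print("largest change:   ", max(abs(a - o) for a, o in zip(adversarial, original)))
```

Deep networks are not linear, but the same effect, many tiny coordinated changes producing a large shift in the output, is one common explanation for why they can be fooled by inputs that look unchanged to a person.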

Applications of Deep Learning

Deep learning has revolutionized many industries in recent years, with applications in computer vision, natural language processing, and recommender systems.

One of the best-known applications of deep learning is computer vision. Deep learning algorithms can be trained to automatically recognize objects in images, identify faces, and even read handwritten text. This technology is used in self-driving cars, security systems, and image search engines.

Natural language processing is another area where deep learning has made significant advances. Deep learning algorithms can be used to automatically translate between languages, understand the sentiment of text, and generate new text from scratch. This technology is used in machine translation services such as Google Translate, as well as in chatbots and voice recognition systems.

Recommender systems are a third area where deep learning has been applied successfully. Deep learning algorithms can be used to predict what a user wants to buy or watch based on their past behavior. This technology is used by companies such as Amazon and Netflix to recommend products and videos to their users.

Conclusion

Neural networks have come a long way since the first perceptron in 1957. With advances in technology, deep learning and artificial intelligence are becoming increasingly powerful tools for solving complex problems. We are now able to create neural networks that can learn, improve, and make decisions with unprecedented levels of accuracy and speed. The possibilities for applications of these technologies are only beginning to be explored, making this an exciting time for anyone interested in machine learning or AI research.